Dataset Update

When the data in the database changes, the updated content needs to be synchronized to the dataset.

Database Data Change Classification

Column Type Changes

When the type of a field in the database changes and you need to use the new data type in the HENGSHI system, first update the data, and then manually change the field type to the new type in Field Management.

Add Rows/Delete Rows in Table

When rows are added to or deleted from a database table, direct connection datasets created from that table and datasets with the acceleration engine enabled behave differently in the HENGSHI system:

Direct Connection Dataset: When row data in the database is updated, refreshing the webpage does not update the dataset metadata, but changes in row data caused by adding rows/deleting rows are reflected in the dataset (newly added rows are visible in the dataset, and deleted rows no longer appear in the dataset).

Dataset with Acceleration Engine Enabled: When row data in the database is updated, refreshing the webpage updates neither the dataset's metadata nor its row data. Both the metadata and the data are updated only after a data update is executed.
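The difference between the two dataset types can be pictured with a toy model. This is only an illustrative sketch, not HENGSHI's implementation: the class names `DirectDataset` and `AcceleratedDataset` are hypothetical, and the "database table" is a plain Python list.

```python
class DirectDataset:
    """Hypothetical model: reads the source table on every access."""

    def __init__(self, source_rows):
        self._source = source_rows  # shared reference to the "database table"

    def rows(self):
        return list(self._source)


class AcceleratedDataset:
    """Hypothetical model: serves a snapshot that changes only on update()."""

    def __init__(self, source_rows):
        self._source = source_rows
        self._snapshot = list(source_rows)  # copy taken at import time

    def rows(self):
        return list(self._snapshot)

    def update(self):
        # Stands in for executing a data update in the product.
        self._snapshot = list(self._source)


def ids(rows):
    return [row["id"] for row in rows]


table = [{"id": 1}, {"id": 2}]
direct = DirectDataset(table)
accelerated = AcceleratedDataset(table)

table.append({"id": 3})  # a row is added in the database
table.pop(0)             # a row is deleted in the database

direct_ids = ids(direct.rows())            # reflects both changes
before_update = ids(accelerated.rows())    # still the old snapshot
accelerated.update()
after_update = ids(accelerated.rows())     # now matches the source
```

In this model the direct dataset sees added and deleted rows immediately, while the accelerated dataset keeps serving its snapshot until `update()` is called.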

Add Column to Table

When new fields are added to a database table, you must update the data before you can view them in the HENGSHI system. After the update, the new fields do not appear on the Data Management page but are listed under the "Disabled Fields" group in the Field Management section, where users can choose whether to enable them.

Delete Column

Deleting fields from a database table affects the datasets built on that table in the HENGSHI system. There are two cases:

When deleting unreferenced columns: Datasets created from this table display only the table headers. After the data is updated, the datasets display normally.

When deleting a column that newly added columns depend on: In datasets created from this table, the newly added columns that depend on the deleted column must be deleted first. The dataset can then be restored by updating the data.
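The second case amounts to a dependency check. The following is a minimal sketch under assumptions: the function `blocking_columns` and the column names are hypothetical, and the dependency map is supplied by hand rather than read from the product.

```python
def blocking_columns(deleted_columns, added_columns):
    """Return the newly added columns that reference any deleted source column.

    added_columns maps each newly added column name to the set of source
    columns it depends on (a hand-written, hypothetical structure).
    """
    deleted = set(deleted_columns)
    return sorted(
        name for name, deps in added_columns.items() if deleted & set(deps)
    )


# Hypothetical dataset with two newly added columns.
added = {
    "profit": {"revenue", "cost"},
    "order_year": {"order_date"},
}

must_delete_first = blocking_columns(["cost"], added)
no_blockers = blocking_columns(["region"], added)
```

Here deleting `cost` means `profit` must be removed before the data update can restore the dataset, while deleting an unreferenced column such as `region` blocks nothing.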

Data Update

Data updates primarily involve updating the metadata of datasets. Data updates support Immediate Update, Update Schedule, Incremental Update, and Full Update.

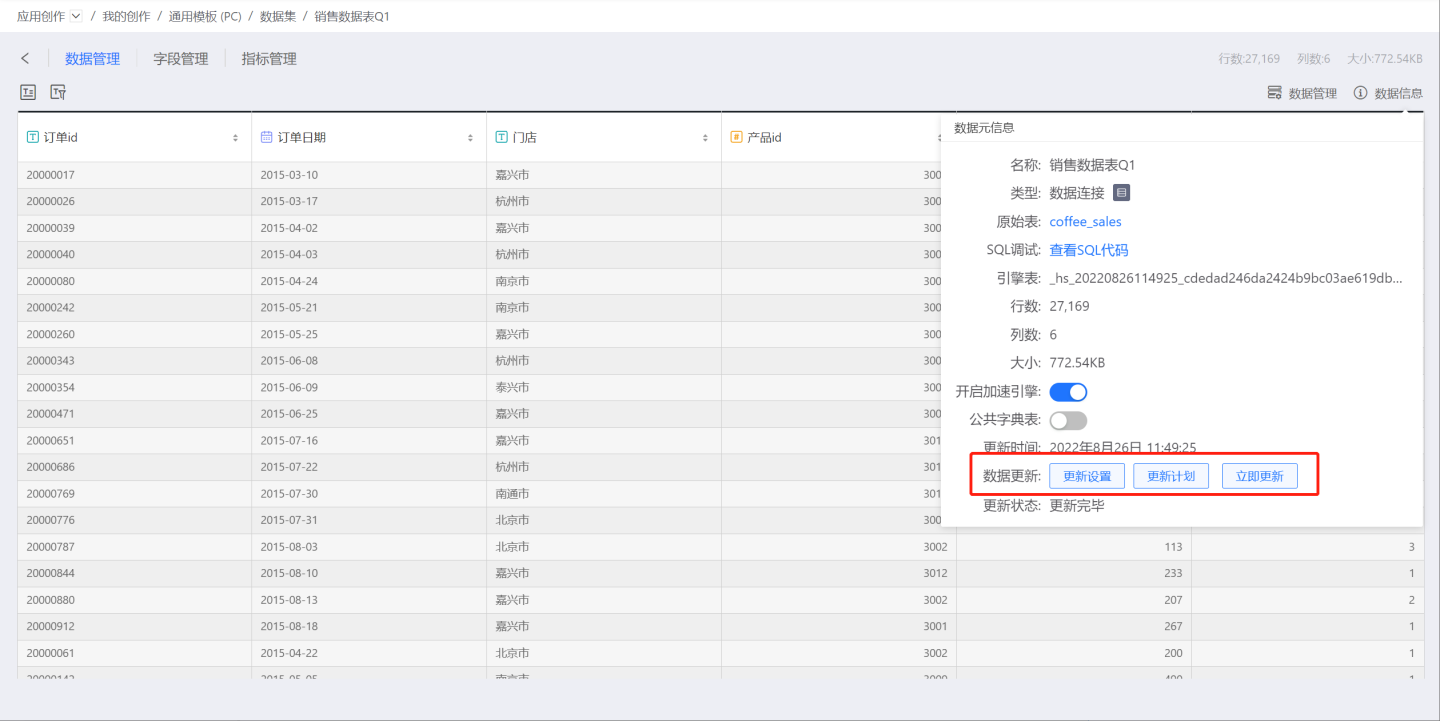

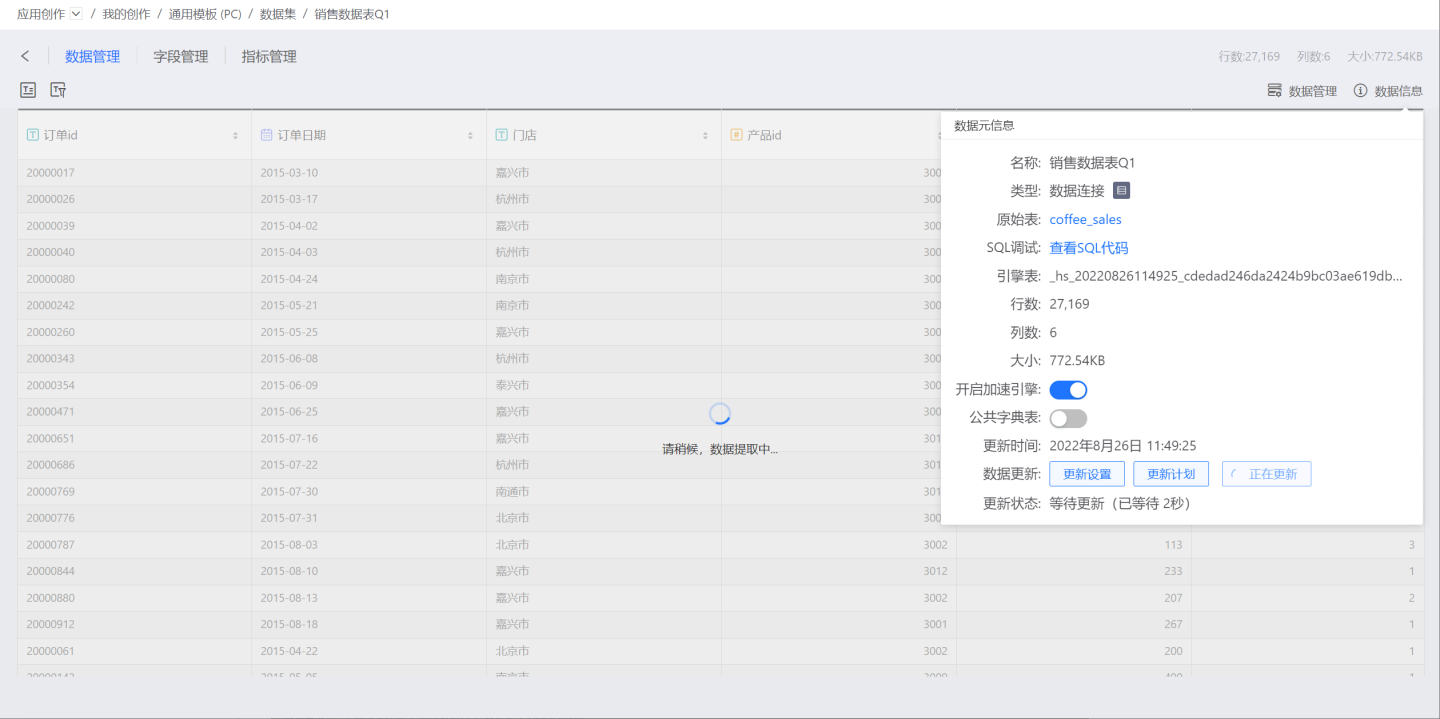

Immediate Update

Click Update Now to start updating the dataset. The update status changes to "Updating," and the time shown after the status is how long the update has been waiting. Once the update starts running, the time it takes is shown as "Time Spent."

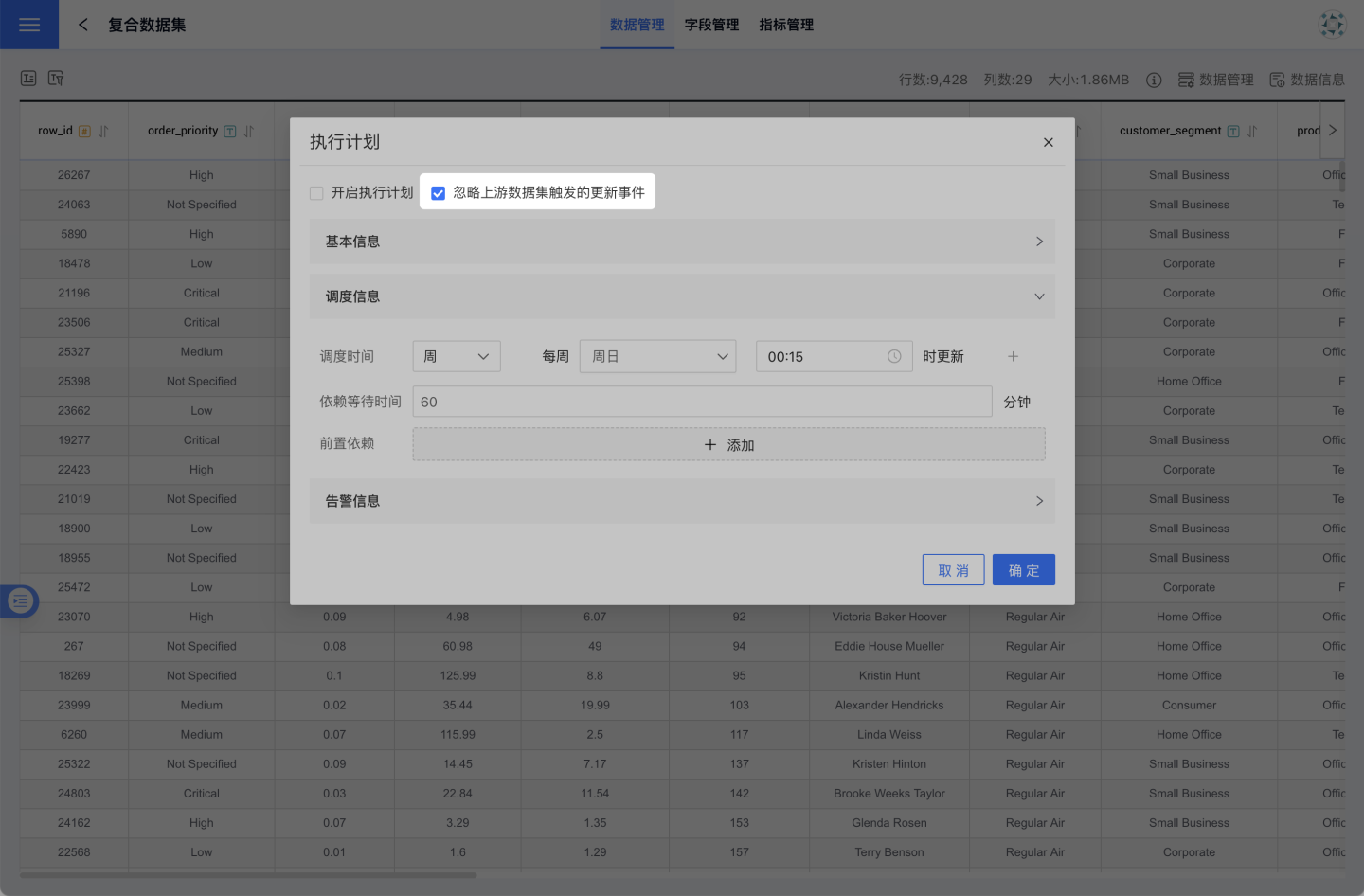

Update Schedule

After setting the update schedule, the dataset synchronizes changes in the database table at the specified time. Click Update Schedule, and the execution plan settings are listed in the pop-up window. Check 'Enable Execution Plan', and add update schedules as needed, including basic information, scheduling information, and alert information.

- Basic Information: Set the number of retries upon execution and the priority of the task. Task priority is divided into high, medium, and low levels. High-priority tasks are processed first.

- Scheduling Information:

  - Set the scheduling time for the task. Multiple scheduling times can be set. Execution plans can be set by hour, day, week, or month, or with custom time points.

    - Hour: Set the minute of each hour at which the update runs.

    - Day: Set a specific time of day for the update.

    - Week: Set a specific time of day for updates on selected days of the week. Multiple days can be selected.

    - Month: Set a specific time of day for updates on selected days of the month. Multiple days can be selected.

    - Custom: Define the update time points yourself.

  - Set the pre-dependencies of the task. Multiple pre-dependency tasks can be set.

  - Set the dependency waiting time.

- Alert Information: Enable failure alerts. When a task fails to execute, an email notification will be sent to the recipient.

As shown in the figure above, set the update to occur every Monday at 0:15. Click "OK" to complete the update schedule setup.
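To make the weekly setting concrete, the sketch below computes when an "every Monday at 0:15" schedule would next fire. It is an illustration only: `next_weekly_run` is a hypothetical helper, time zones are ignored, and HENGSHI's own scheduler may behave differently.

```python
from datetime import datetime, timedelta


def next_weekly_run(now, weekday, hour, minute):
    """Return the first run time strictly after `now`.

    weekday follows datetime.weekday(): Monday is 0, Sunday is 6.
    """
    candidate = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    candidate += timedelta(days=(weekday - now.weekday()) % 7)
    if candidate <= now:
        # This week's slot has already passed, so move to next week.
        candidate += timedelta(days=7)
    return candidate


# 2024-01-03 is a Wednesday; the next Monday 0:15 is 2024-01-08.
from_wednesday = next_weekly_run(datetime(2024, 1, 3, 10, 0), 0, 0, 15)

# Just before the slot on a Monday, the run is later the same day.
from_monday_midnight = next_weekly_run(datetime(2024, 1, 1, 0, 0), 0, 0, 15)
```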


Update Time always displays the time of the most recent completed update.

Depending on the scenario, users can also disable the update schedule in the update schedule pop-up window to coordinate and adjust data updates.

Users can also enter System Settings -> Task Management -> Dataset Update -> Modify Schedule to redefine the update schedule for a specific dataset, or directly enable or disable the update schedule in the dataset update list.

See Task Management.

Additionally, a composite dataset can ignore upstream dependency trigger events when it updates. Composite datasets, such as federated datasets, merged datasets, and aggregated datasets, are frequently used. When a composite dataset is imported into the engine, its upstream datasets are often also being imported into the engine and updated frequently, and each of those updates triggers an update of the composite dataset. Ignoring upstream triggers lets the composite dataset follow only its own update schedule, which reduces the load of data synchronization and writing.

Tip

Updates for multi-table join, data aggregation, and data merging datasets: When a dataset that such a dataset depends on is updated, the dependent dataset is updated automatically.
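The trigger behavior described above can be sketched as a walk over a dependency graph. This is a simplified model under assumptions: `triggered_updates`, the `downstream` map, and the dataset names are all hypothetical, and the sketch assumes that a dataset which ignores upstream triggers also does not pass the trigger on to its own dependents.

```python
from collections import deque


def triggered_updates(updated, downstream, ignore_upstream=frozenset()):
    """Return the datasets whose update is triggered when `updated` changes.

    downstream maps a dataset name to the datasets built on top of it.
    Datasets in ignore_upstream follow only their own schedule.
    """
    triggered = []
    seen = {updated}
    queue = deque([updated])
    while queue:
        current = queue.popleft()
        for child in downstream.get(current, []):
            if child in seen:
                continue
            seen.add(child)
            if child in ignore_upstream:
                continue  # not triggered, so nothing propagates through it
            triggered.append(child)
            queue.append(child)
    return triggered


# Hypothetical chain: orders -> orders_joined -> monthly_summary
downstream = {
    "orders": ["orders_joined"],
    "orders_joined": ["monthly_summary"],
}

default_chain = triggered_updates("orders", downstream)
summary_ignores = triggered_updates("orders", downstream, {"monthly_summary"})
```

With the default behavior, an update of `orders` cascades to both composite datasets; when `monthly_summary` ignores upstream triggers, only `orders_joined` is updated.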

Please note

When a dataset update fails, the update status shows as "Update Failed". Please click "Update Now" or contact the administrator.

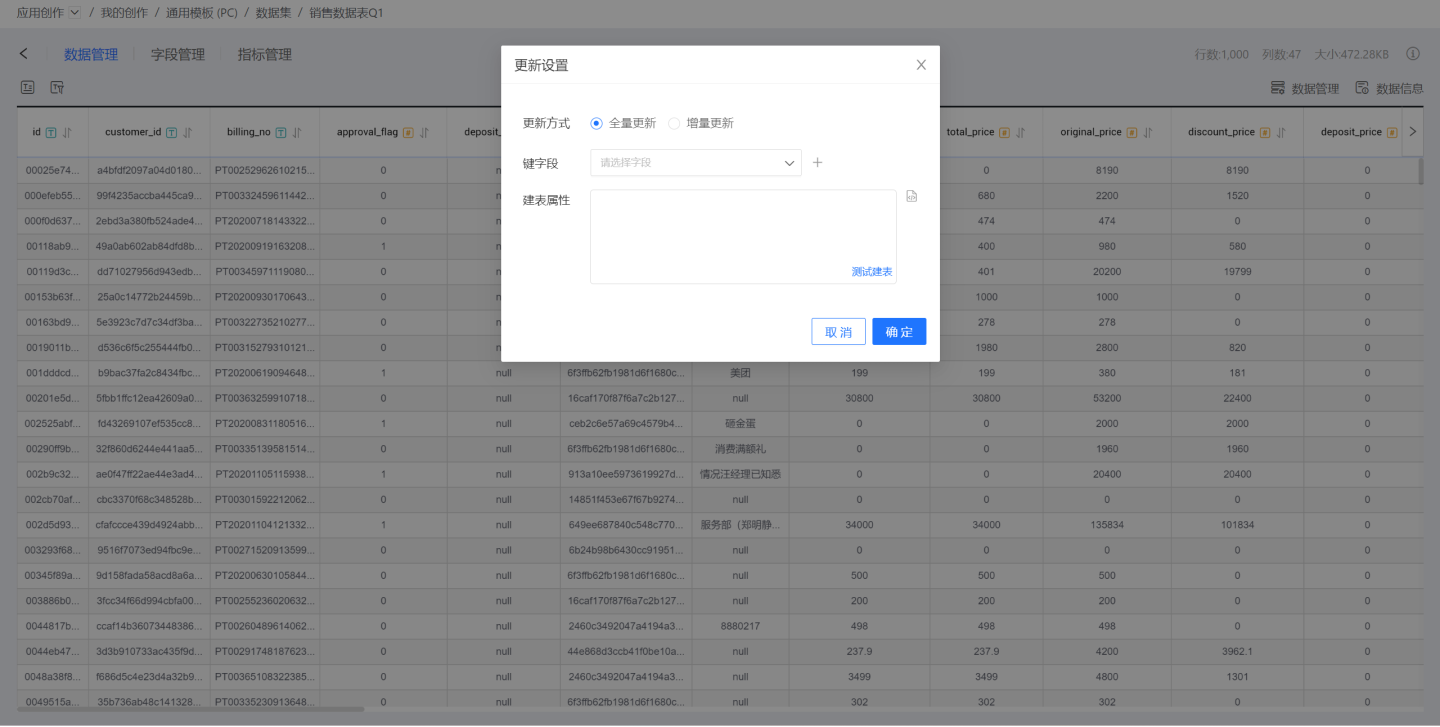

Update Settings

Click Update Settings to choose the data update method. Users can choose between full update and incremental update based on their needs. Full update ensures data accuracy, while incremental update improves the efficiency of data updates.

- Update Methods

  - Full Update updates the entire content of a dataset. Full Update offers high accuracy and is suitable for datasets with small data volumes and low update frequencies.

  - Incremental Update updates part of the dataset's content. Users specify the incremental fields that determine which data to update. This method is suitable for datasets with large data volumes and high update frequencies.

    - Incremental Update is only applicable to datasets created via "SQL Query/Direct Connection".

    - It is recommended to choose numeric, date, or time fields as incremental fields, because they are easier to compare when identifying which data needs updating.

- Key Fields: Key fields are used as primary keys and distribution keys. They have two functions:

  - They serve as primary keys during incremental updates.

  - They serve as primary keys and distribution keys during table creation. If table creation properties are set, the table creation properties take precedence and the key fields do not take effect.

- Table Creation Properties: Customize partition fields and index fields during the data synchronization table creation process to distribute data storage. Table creation properties only take effect during the first table creation. Currently, data sources that support table creation properties include Greenplum, Apache Doris, StarRocks, and ClickHouse.
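The contrast between the two update methods can be sketched as follows. This is an assumption-laden illustration, not the product's algorithm: the source does not describe how incremental fields are compared, so the sketch assumes that rows whose incremental field exceeds the largest value already synchronized are fetched and upserted by key field. The function and field names are hypothetical.

```python
def full_update(source_rows, key_field="id"):
    """Full update: rebuild the synchronized copy from every source row."""
    return {row[key_field]: dict(row) for row in source_rows}


def incremental_update(synced, source_rows, incremental_field, key_field="id"):
    """Upsert only rows newer than the highest incremental value synced so far.

    Returns the number of rows written. Rows deleted from the source are
    not detected by this sketch.
    """
    watermark = max(
        (row[incremental_field] for row in synced.values()), default=None
    )
    written = 0
    for row in source_rows:
        if watermark is None or row[incremental_field] > watermark:
            synced[row[key_field]] = dict(row)  # key field acts as primary key
            written += 1
    return written


source = [
    {"id": 1, "amount": 10, "updated_at": 1},
    {"id": 2, "amount": 20, "updated_at": 2},
]
synced = full_update(source)

# Later, one row changes and one row is added in the source.
source[0] = {"id": 1, "amount": 15, "updated_at": 3}
source.append({"id": 3, "amount": 30, "updated_at": 4})

rows_written = incremental_update(synced, source, "updated_at")
```

Only the changed and the new row are written, which is why a monotonically increasing numeric, date, or time field is convenient as the incremental field, and why the key field is needed to overwrite the existing copy of row 1 instead of duplicating it.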

Batch Data Update

When the amount of updated data is large, the update may take longer than the query time limit of the source database, causing the data update to fail. In this case, you can set the maximum size of a single update by configuring the ETL_SRC_MYSQL_PAGE_SIZE option. When the limit is exceeded, the data is updated in batches. Batch data updates apply to incremental updates and to full updates with key fields set. Currently, only MySQL data sources support batch data updates.

Tip

Please contact the technical staff to configure ETL_SRC_MYSQL_PAGE_SIZE.
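One common way to read a large table in bounded batches is keyset pagination on a key field. The sketch below shows that technique only as an illustration: how HENGSHI actually applies ETL_SRC_MYSQL_PAGE_SIZE is not described here, the table `src` and the function `fetch_in_batches` are hypothetical, and Python's built-in `sqlite3` stands in for a MySQL source.

```python
import sqlite3


def fetch_in_batches(conn, page_size):
    """Yield lists of (id, value) rows, at most page_size rows per query."""
    last_id = None
    while True:
        if last_id is None:
            cursor = conn.execute(
                "SELECT id, value FROM src ORDER BY id LIMIT ?", (page_size,)
            )
        else:
            # Resume after the last key seen, so each query stays short.
            cursor = conn.execute(
                "SELECT id, value FROM src WHERE id > ? ORDER BY id LIMIT ?",
                (last_id, page_size),
            )
        rows = cursor.fetchall()
        if not rows:
            return
        yield rows
        last_id = rows[-1][0]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE src (id INTEGER PRIMARY KEY, value TEXT)")
conn.executemany(
    "INSERT INTO src VALUES (?, ?)", [(i, f"row{i}") for i in range(1, 11)]
)

batches = list(fetch_in_batches(conn, page_size=4))
batch_sizes = [len(batch) for batch in batches]
```

Because each query is bounded by the page size, no single query has to scan the whole table, which is consistent with the requirement that key fields be set for batch updates.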

Update Management

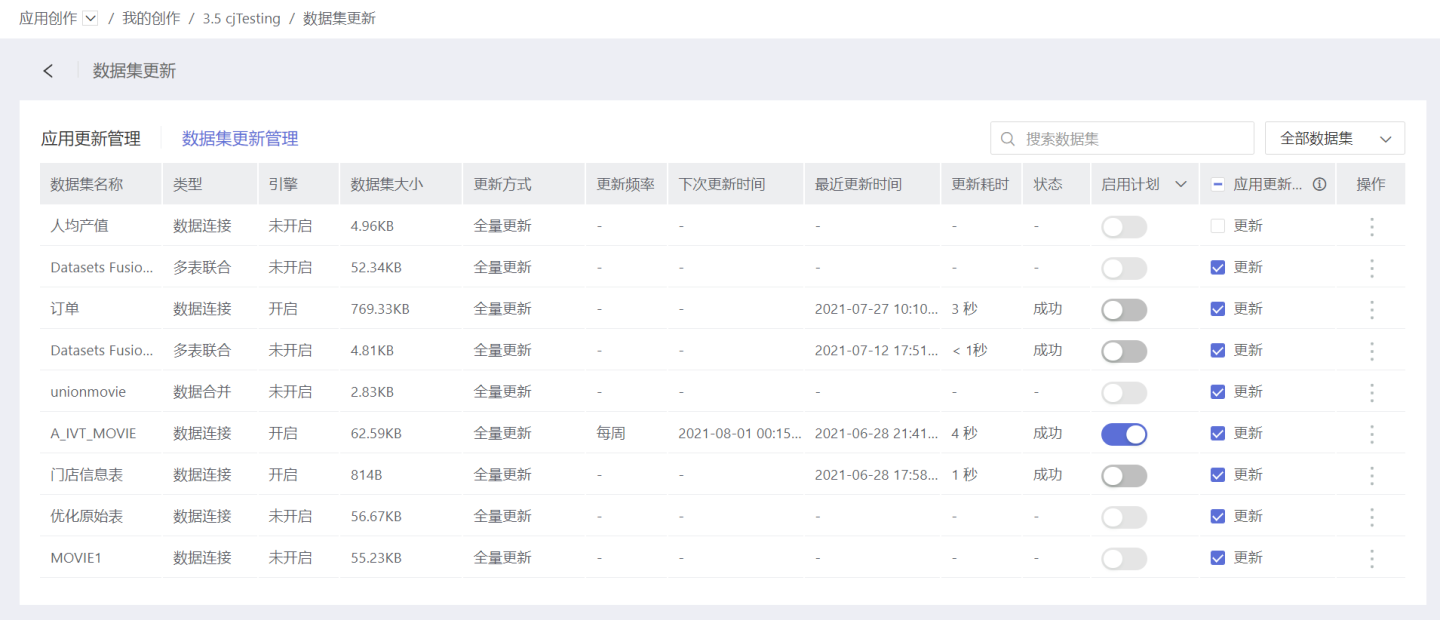

Dataset updates support application update management and dataset update management.

Application Update Management

Application update management manages updates and update schedules for the datasets within an application in one place. The steps are as follows.

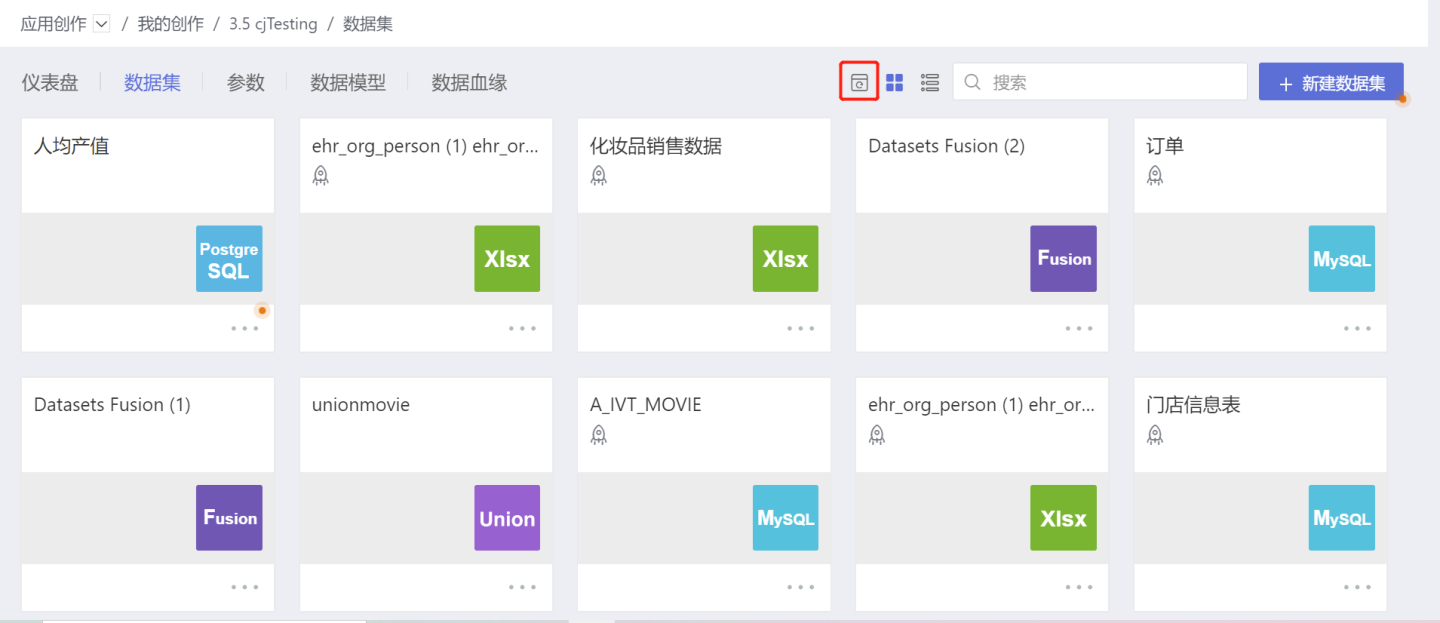

1. Click the dataset update button in the red box in the dataset to enter the application update management page.

2. The application update management page displays information such as the size of the application's datasets, the update frequency of the application datasets, update time, update status, enable schedule, and the number of datasets updated in the application.

3. Open the dataset update management page, and in the "Update when application updates" column, check the datasets that need to be included in the overall update scope of the application.

4. Return to the application update management page. The "Update datasets" column displays the number of datasets selected for the overall application update in step 3.

5. Click the three-dot menu in the operations column to set the update method.

   - When Immediate Update is selected, the datasets within the application update scope start updating immediately.

   - When Update Schedule is selected, the datasets within the application update scope are updated according to the configured schedule.

6. Click the update history in the three-dot menu to view the dataset update history.

Dataset Update Management

The dataset update management page displays the update settings information for a single dataset within the application. Here, you can perform immediate updates to the dataset, set update schedules, view update records, check update status, confirm the next update time, configure update settings, and control whether the dataset updates along with the application.

Dataset Loading Failed

In cases where the data connection is unavailable, issues such as dataset loading failure may occur. Hovering the cursor over the loading failure prompt will display the reason for the failure.