Dataset Update

When a table definition changes in the database, the dataset configuration in the HENGSHI system must be updated so that the table can still be accessed correctly and efficiently. Use the Update Schema feature for this purpose.

If a dataset has been imported into the HENGSHI engine and the table data changes in the database, the data in the HENGSHI engine must also be updated to keep analysis results current. Use the Update Data feature for this purpose.

Update Schema

When a table definition in the database undergoes any of the following changes, use Update Schema to update the field configuration recorded in the dataset.

Column Type Changes

When the type of a field in a database table changes, after Update Schema the field type recorded by the system changes accordingly. If the new type can be converted to the old type, the field can be used as before and the HENGSHI system converts the type automatically. If the new type is incompatible with the old type, an error occurs when the dataset is accessed, and the user must change the field type in the HENGSHI system to a compatible type.

Column Name Changes

When the name of a field in a database table changes, after Update Schema the system deletes the field with the old name and adds a field with the new name. If the old field is not referenced anywhere, this has no impact. If it is referenced, the HENGSHI system displays "Field name *** does not exist" wherever it is used, and the user must manually update those references.

Column Comment Changes

When the comment of a field in a database table changes, after Update Schema the field description recorded by the system is updated accordingly.

Adding Columns

When new fields are added to a table in the database, run Update Schema to make them available in the HENGSHI system. After the update, the new fields do not appear on the data management page; instead, they are listed in the "Hidden Fields" group in field management, where users can choose whether to display them.

Deleting Columns

When a field is deleted from a table in the database, after Update Schema the system deletes the corresponding field. If the field is not referenced anywhere, this has no impact. If it is referenced, the HENGSHI system displays "Field name *** does not exist" wherever it is used, and the user must manually update those references.
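The field-level effects described above can be pictured as a comparison between the fields recorded in the dataset and the current table definition. The following Python sketch is illustrative only: it assumes fields are matched by name, and the `COMPATIBLE` table of convertible type pairs is hypothetical, because the actual conversion rules are internal to the HENGSHI system. Matching by name is why a renamed column shows up as one deleted field plus one added field.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Field:
    name: str
    type: str
    comment: str = ""


# Hypothetical (new_type, old_type) pairs that could be converted automatically.
# The real conversion rules are internal to the HENGSHI system.
COMPATIBLE = {("bigint", "int"), ("int", "bigint"), ("int", "double"), ("text", "varchar")}


def diff_schema(old_fields, new_fields):
    """Compare recorded fields with the current table definition, matching by name."""
    old = {f.name: f for f in old_fields}
    new = {f.name: f for f in new_fields}
    changes = {
        # A renamed column appears as one added and one deleted field.
        "added": sorted(new.keys() - old.keys()),
        "deleted": sorted(old.keys() - new.keys()),
        "type_changed": [],
        "comment_changed": [],
    }
    for name in sorted(old.keys() & new.keys()):
        before, after = old[name], new[name]
        if before.type != after.type:
            compatible = (after.type, before.type) in COMPATIBLE
            changes["type_changed"].append((name, before.type, after.type, compatible))
        if before.comment != after.comment:
            changes["comment_changed"].append(name)
    return changes


def broken_references(changes, referenced_names):
    """Messages for deleted fields that are still referenced somewhere."""
    return [
        f"Field name {name} does not exist"
        for name in changes["deleted"]
        if name in referenced_names
    ]
```

For example, renaming `amt` to `amount` while `amt` is still used in a chart would produce the "Field name amt does not exist" message for that reference.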

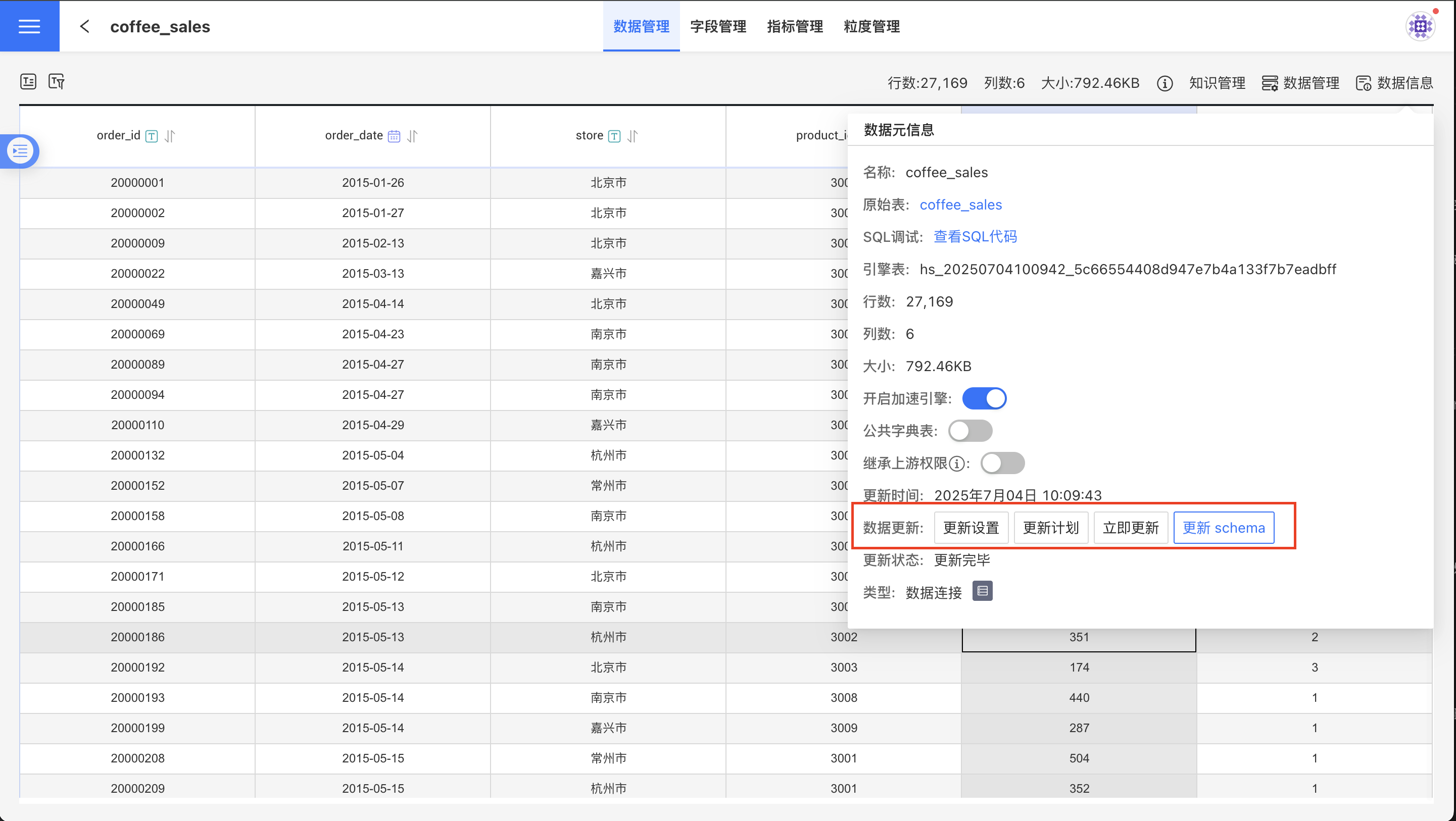

Methods to Update Schema

There are two ways to update the schema:

- Click the Update Schema button in the Data Information section of the dataset page.

- Use the Edit Dataset feature.

Data Update

When row data in the database changes and the dataset has Import Engine enabled, use Data Update to keep the data current. If Import Engine is not enabled, Data Update is not required. Data Update consists of the Update Now, Update Plan, and Update Settings functions in the Data Information section of the dataset page.

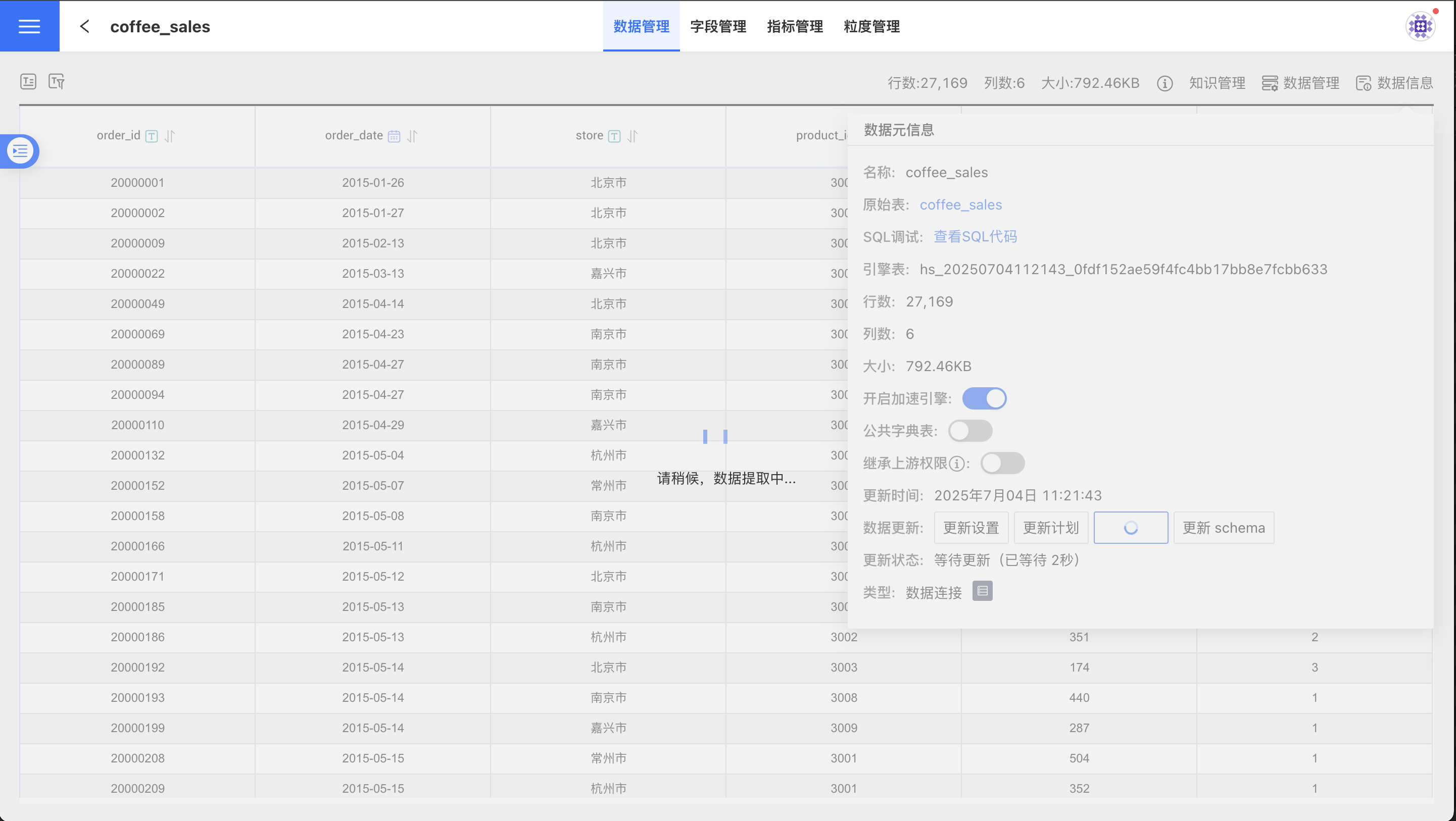

Update Now

Click Update Now to start updating the dataset. The update status then shows "Updating." The time displayed next to the status first records how long the update has been waiting to start; once the update begins, it records the "Time Spent" on the update.
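The two phases of the displayed time can be modeled as a task with three timestamps. This Python sketch is a hypothetical model, not HENGSHI code; the `UpdateTask` class, its timestamps, and the "Updated" label for a finished task are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UpdateTask:
    queued_at: float                     # when Update Now was clicked (seconds)
    started_at: Optional[float] = None   # when the update actually began
    finished_at: Optional[float] = None  # when the update completed


def displayed_status(task: UpdateTask, now: float):
    """Return (status, time label, seconds) as the status area might show them."""
    if task.started_at is None:
        # Queued: the time shows how long the update has been waiting.
        return ("Updating", "Waiting", now - task.queued_at)
    if task.finished_at is None:
        # Running: the time shows the time spent so far.
        return ("Updating", "Time Spent", now - task.started_at)
    # Finished: total time spent (the "Updated" label is an assumption).
    return ("Updated", "Time Spent", task.finished_at - task.started_at)
```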

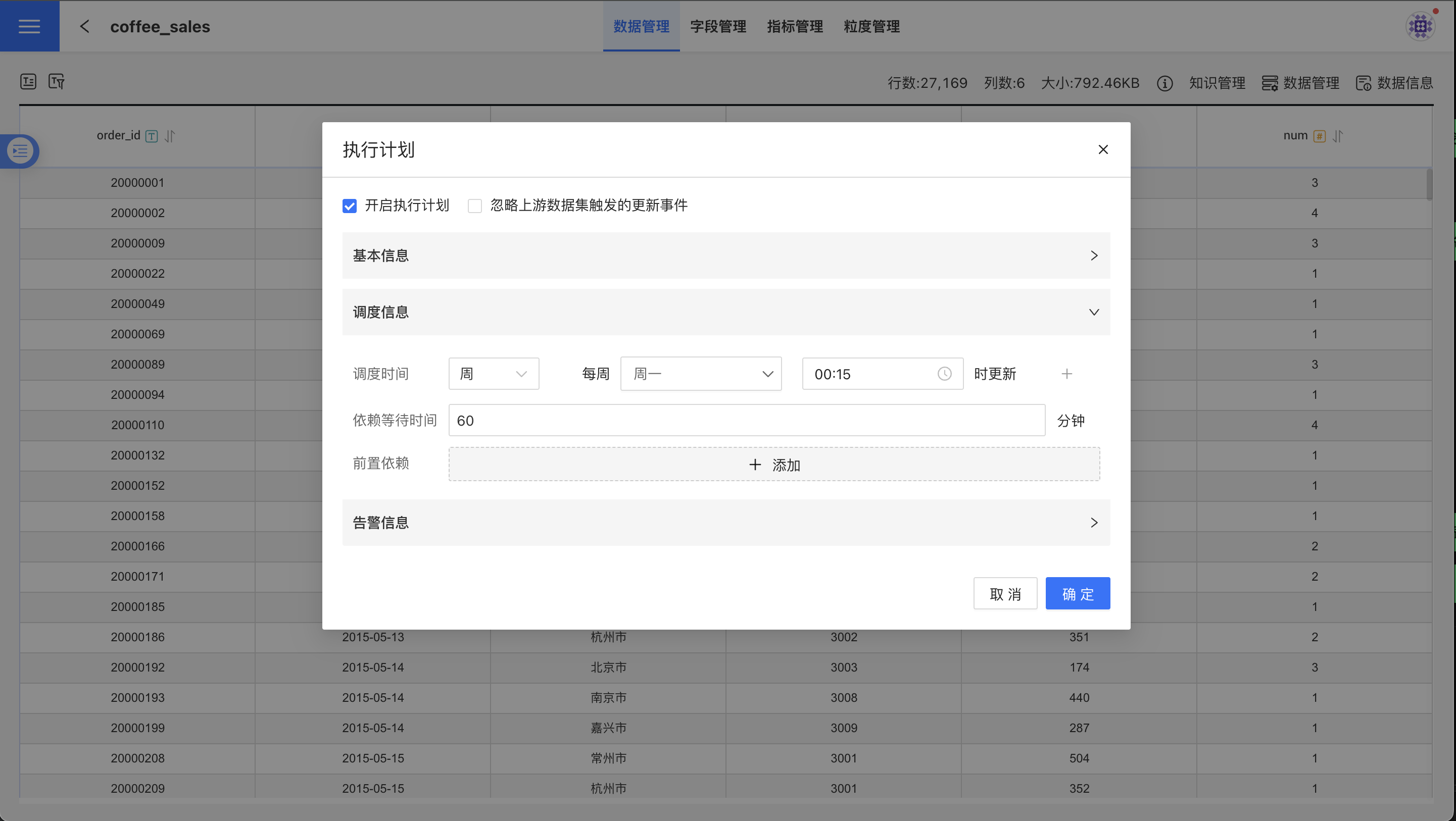

Update Plan

After an update plan is set, the dataset synchronizes changes in the database table at the specified times. Click Update Plan to open the execution plan settings dialog. Select Enable Execution Plan and add update plans as needed, including basic information, scheduling information, and alert information. The Update Time always shows when the most recent update completed.

- Enable Execution Plan: Check to enable; uncheck to disable the execution plan.

- Ignore Update Events Triggered by Upstream Datasets: This applies only to composite datasets, such as multi-table union datasets, merged datasets, aggregated datasets, row-to-column datasets, and column-to-row datasets. When this option is selected, refreshing an upstream dataset does not trigger a refresh of this dataset, which follows only its own execution plan. When it is cleared, refreshing an upstream dataset also triggers a refresh of this dataset.

- Basic Information: Set the retry count and task priority for execution. Task priority has three levels: high, medium, and low; high-priority tasks are processed first.

- Scheduling Information:

  - Set the task scheduling time. Multiple scheduling times can be configured, and execution plans can be set by hour, day, week, month, or a custom schedule.

    - Hour: Set the minute of each hour at which the update runs.

    - Day: Set the time of day at which the update runs.

    - Week: Set the time on selected days of the week; multiple days can be selected.

    - Month: Set the time on selected days of the month; multiple days can be selected.

    - Custom: Define custom update times.

  - Set task dependencies. Multiple dependency tasks can be configured.

  - Set the dependency wait time.

- Alert Information: Enable failure alerts. When a task fails, an email notification is sent to the configured recipients.
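The effect of task priority and retry count can be illustrated with a priority queue. In this hypothetical Python sketch, tasks are dequeued by priority (high before medium before low, ties in submission order), and a failed task is retried up to the configured number of additional attempts. The scheduler's real internals are not documented here; `run_tasks` and its parameters are invented names, and treating the retry count as "extra attempts after the first" is an assumption.

```python
import heapq
from itertools import count

# Lower rank is dequeued first.
PRIORITY_RANK = {"high": 0, "medium": 1, "low": 2}


def run_tasks(tasks, run, retries):
    """tasks: list of (name, priority); run(name) returns True on success.

    Each failed task gets up to `retries` additional attempts.
    Returns the execution log and, per task, its outcome and attempt count.
    """
    seq = count()  # tie-breaker that preserves submission order
    heap = [(PRIORITY_RANK[priority], next(seq), name) for name, priority in tasks]
    heapq.heapify(heap)
    log, outcomes = [], {}
    while heap:
        _, _, name = heapq.heappop(heap)
        attempts = 0
        while True:
            attempts += 1
            log.append(name)
            if run(name):
                outcomes[name] = ("succeeded", attempts)
                break
            if attempts > retries:
                # Out of retries: this is where a failure alert would be sent.
                outcomes[name] = ("failed", attempts)
                break
    return log, outcomes
```

A task that still fails after all retries corresponds to the case in which the failure alert email would be sent.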

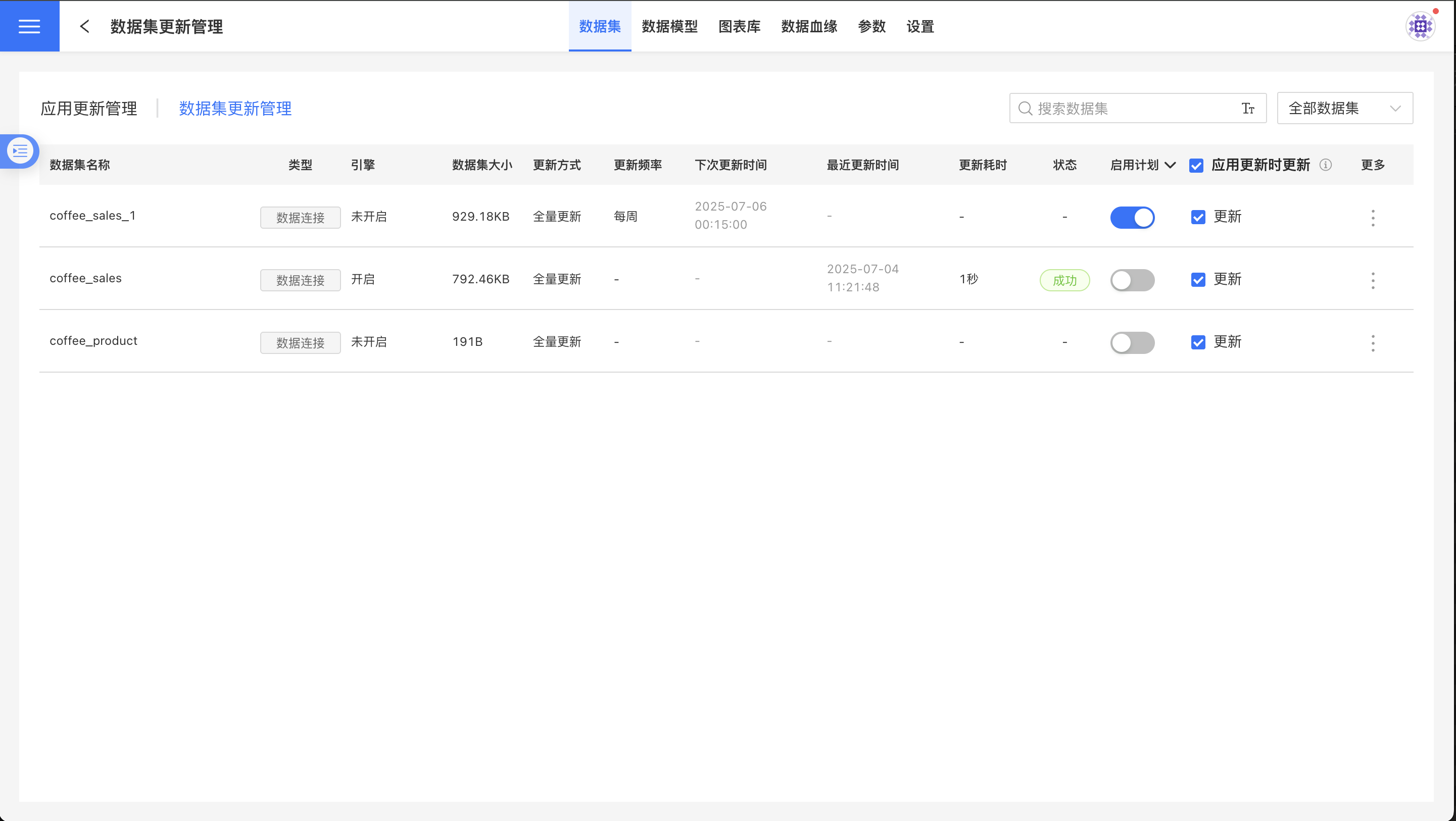

As shown in the figure above, set the update to occur every Monday at 0:15. Click "Confirm" to complete the update plan setup.
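To make the weekly option concrete, the following Python sketch computes the next trigger time for a schedule such as every Monday at 0:15. It is an assumption-based illustration: the function names are hypothetical, and it ignores time zones and the custom option. When several scheduling times are configured, it takes the earliest upcoming one.

```python
from datetime import datetime, timedelta


def next_weekly_run(now, weekdays, hour, minute):
    """First datetime strictly after `now` on one of `weekdays` at hour:minute.

    weekdays uses datetime.weekday() numbering: Monday=0 ... Sunday=6.
    """
    # Checking 8 days covers the case where today is the only selected day
    # but today's time has already passed.
    for offset in range(8):
        day = now.date() + timedelta(days=offset)
        if day.weekday() not in weekdays:
            continue
        candidate = datetime(day.year, day.month, day.day, hour, minute)
        if candidate > now:
            return candidate
    raise ValueError("weekdays must contain at least one value from 0 to 6")


def next_run(now, schedules):
    """Earliest upcoming run across several (weekdays, hour, minute) schedules."""
    return min(next_weekly_run(now, *schedule) for schedule in schedules)
```

For example, if the Monday 0:15 plan is saved on a Wednesday, `next_weekly_run(now, {0}, 0, 15)` returns the following Monday at 0:15.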

Tip

System administrators can go to System Settings -> Task Management -> Dataset Update -> Modify Plan to redefine the update plan for a specific dataset. See Task Management for details.

Caution

If a dataset update fails, the update status shows "Update Failed." Click "Update Now" to retry, or contact the administrator.

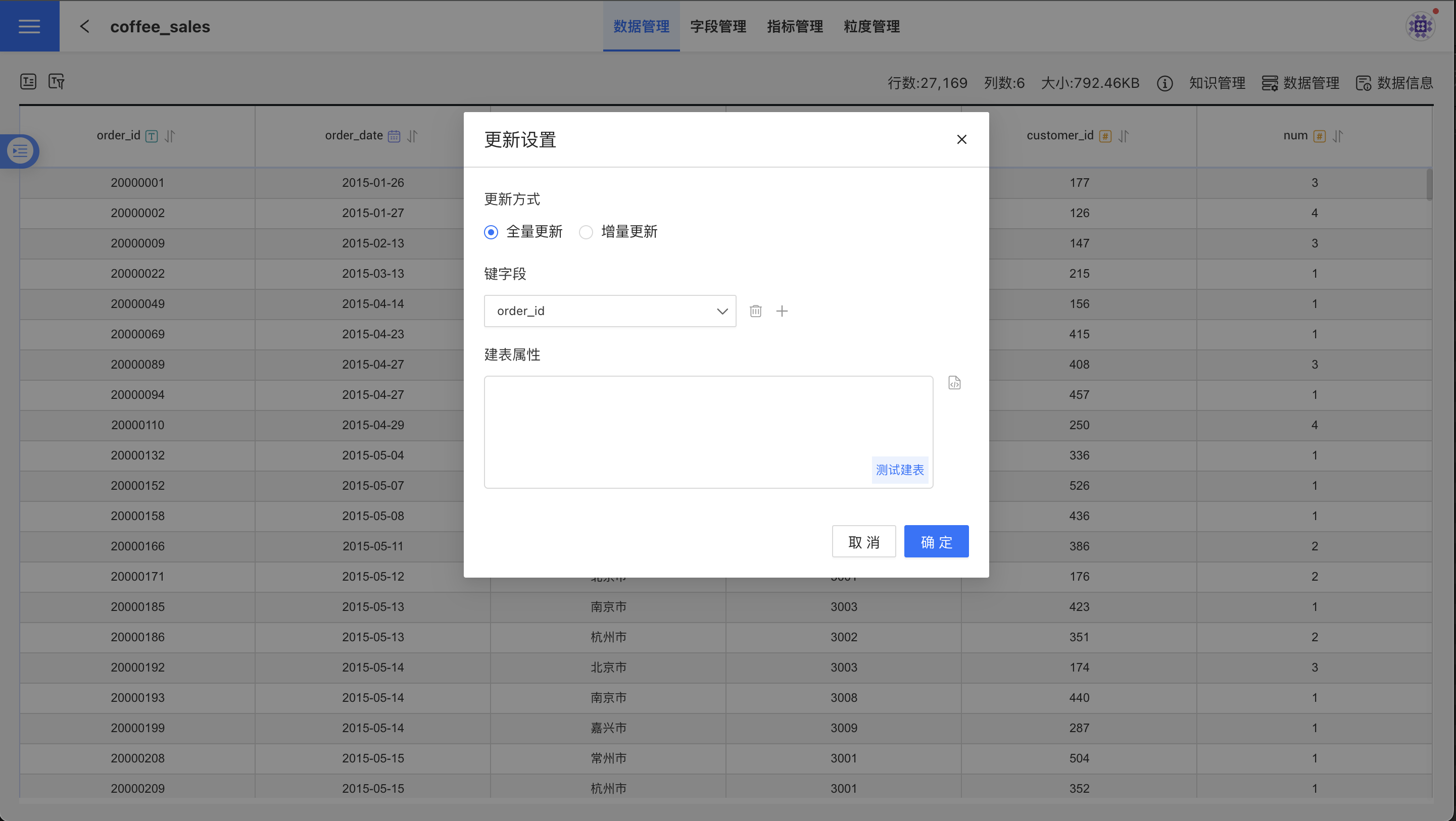

Update Settings

Click Update Settings to choose the data update method. Users can choose a full update or an incremental update as needed: full updates ensure data accuracy, while incremental updates improve update efficiency.

- Update Methods

  - Full update: updates the entire dataset. Full updates are highly accurate and suit datasets with small data volumes and low update frequencies.

  - Incremental update: updates only part of the dataset. Users specify incremental fields that determine which data is updated. This method suits datasets with large data volumes and high update frequencies.

    - Incremental updates apply only to datasets created via “SQL Query/Direct Connection”.

    - Numeric, date, or time fields are recommended as incremental fields, because their values can easily be compared to determine which data needs updating.

- Key Fields: Key fields serve two purposes:

  - They act as primary keys during incremental updates.

  - They act as primary keys and distribution keys when the table is created. If table creation attributes are configured, those attributes take precedence and key fields do not take effect for table creation.

- Table Creation Attributes: When a table is created during data synchronization, users can define partition fields and index fields to control how data is distributed and stored. Table creation attributes take effect only when the table is first created. Currently, table creation attributes are supported for Greenplum, Apache Doris, StarRocks, and ClickHouse.
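The difference between the two update methods can be sketched on in-memory rows. This Python example assumes the common watermark approach (fetch the rows whose incremental field exceeds the largest value already loaded, then upsert them by key fields); it is not HENGSHI's documented algorithm, and the function names are hypothetical.

```python
def full_update(source_rows):
    """Full update: the engine copy becomes an exact copy of the source."""
    return [dict(row) for row in source_rows]


def incremental_update(engine_rows, source_rows, inc_field, key_fields):
    """Incremental update: fetch only rows newer than the current watermark,
    then upsert them by key fields.

    Returns the merged rows and the number of rows fetched from the source.
    """
    # Watermark: the largest incremental-field value already loaded.
    watermark = max((row[inc_field] for row in engine_rows), default=None)
    fetched = [
        row for row in source_rows
        if watermark is None or row[inc_field] > watermark
    ]

    def key(row):
        return tuple(row[k] for k in key_fields)

    # Existing rows keep their order; a fetched row with a matching key
    # replaces the old row, and rows with new keys are appended.
    merged = {key(row): dict(row) for row in engine_rows}
    for row in fetched:
        merged[key(row)] = dict(row)
    return list(merged.values()), len(fetched)
```

In this sketch, rows deleted at the source, or changed without increasing the incremental field, are not picked up by the incremental path. A full update replaces everything and has no such gap, which is one reason full updates are the more accurate option.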

Batch Data Update

When a large amount of data must be updated, the update may take longer than the source database's query timeout, causing the update to fail. In this case, configure the ETL_SRC_MYSQL_PAGE_SIZE option to set the maximum amount of data updated in one pass; data beyond this limit is updated in batches. Batch data updates apply to incremental updates and to full updates with key fields set. Currently, only MySQL data sources support batch data updates.
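Batching can be pictured as keyset pagination over a key field, equivalent to repeatedly running a query like `SELECT ... WHERE key > ? ORDER BY key LIMIT ?`. In this Python sketch, `page_size` stands in for the ETL_SRC_MYSQL_PAGE_SIZE setting and `fetch_page` is a hypothetical callback for the source query; that HENGSHI pages this way is an assumption, not documented behavior.

```python
def fetch_in_batches(fetch_page, page_size, key_field):
    """Read the source in batches of at most `page_size` rows.

    fetch_page(after_key, limit) must return up to `limit` rows ordered by
    key_field whose key is greater than after_key (None means from the start).
    """
    batches = []
    after = None
    while True:
        page = fetch_page(after, page_size)
        if not page:
            break
        batches.append(page)
        if len(page) < page_size:
            break  # a short page means the source is exhausted
        after = page[-1][key_field]  # resume after the last key seen
    return batches
```

Each batch is a separate, shorter query, so no single query needs to run long enough to hit the source database's timeout.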

Tip

Please contact technical personnel to configure ETL_SRC_MYSQL_PAGE_SIZE.

Update Management

Dataset updates can be managed at two levels: application update management and dataset update management.

Application Update Management

Application update management is used to manage the updates of the datasets in an application centrally and to set update schedules. The steps are as follows:

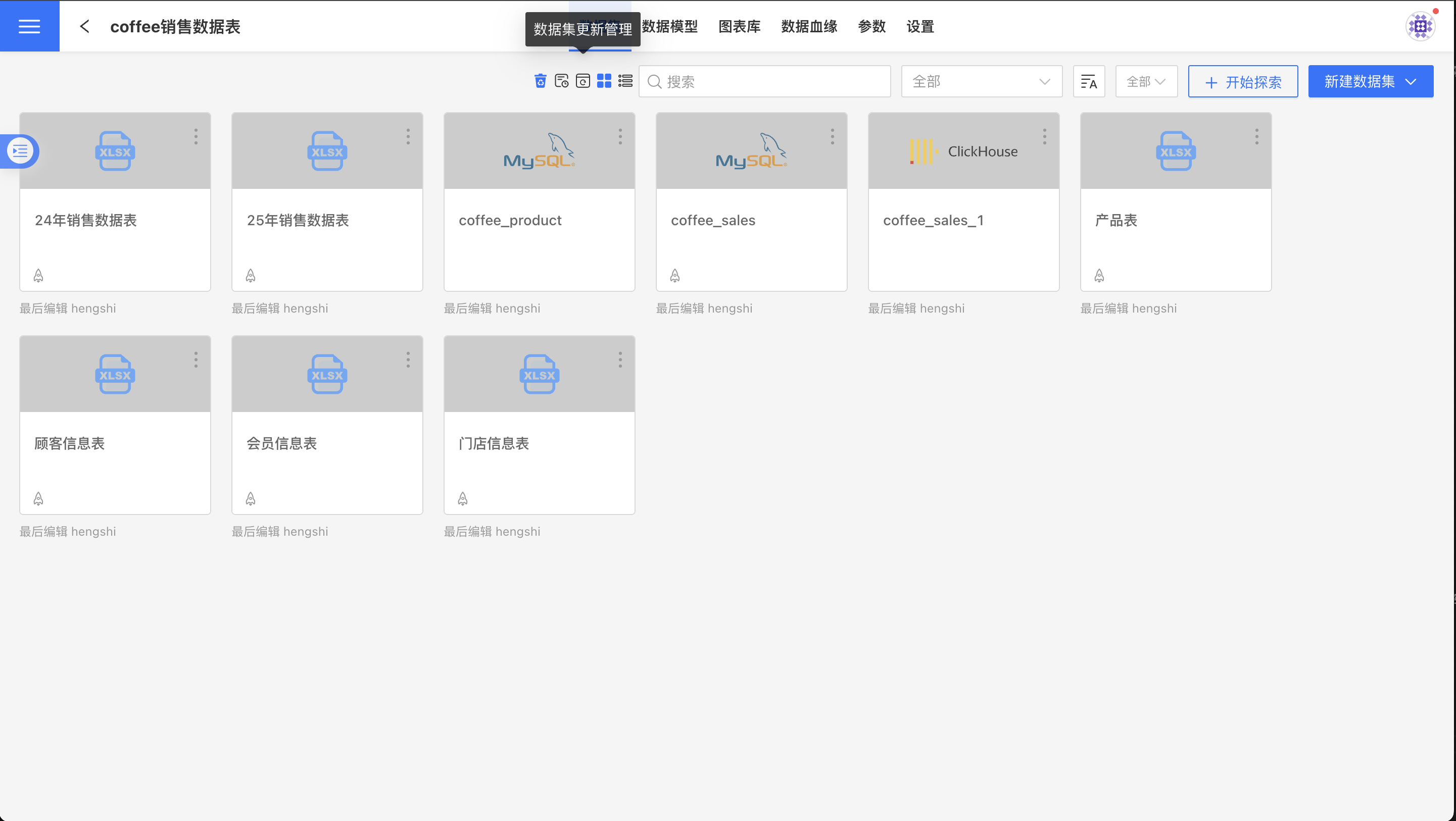

- On the dataset list page, click the Dataset Update button to open the application update management page.

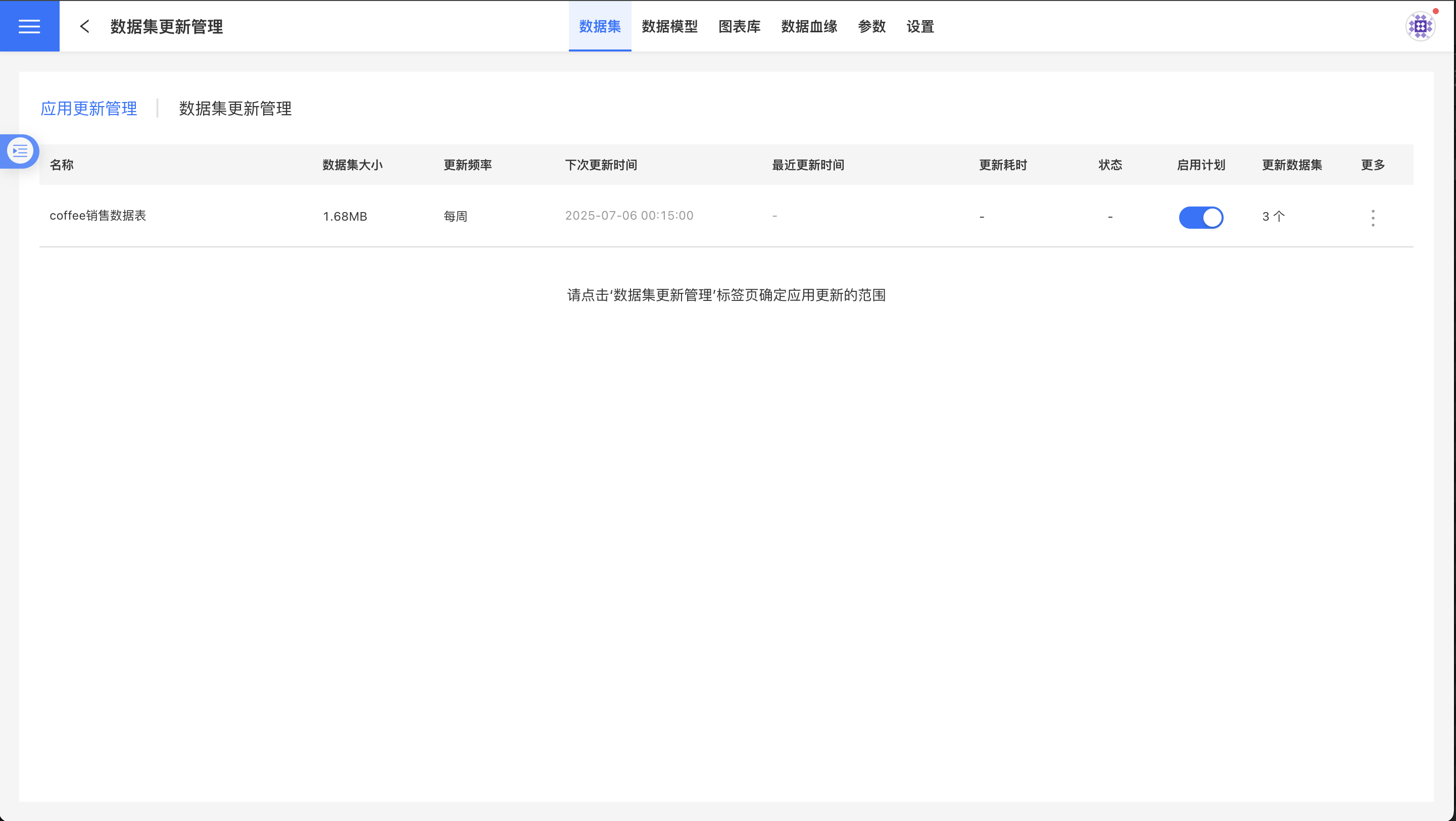

- The application update management page displays information such as the size of the application's datasets, update frequency, update time, update status, enabled schedules, and the number of datasets included in the application update.

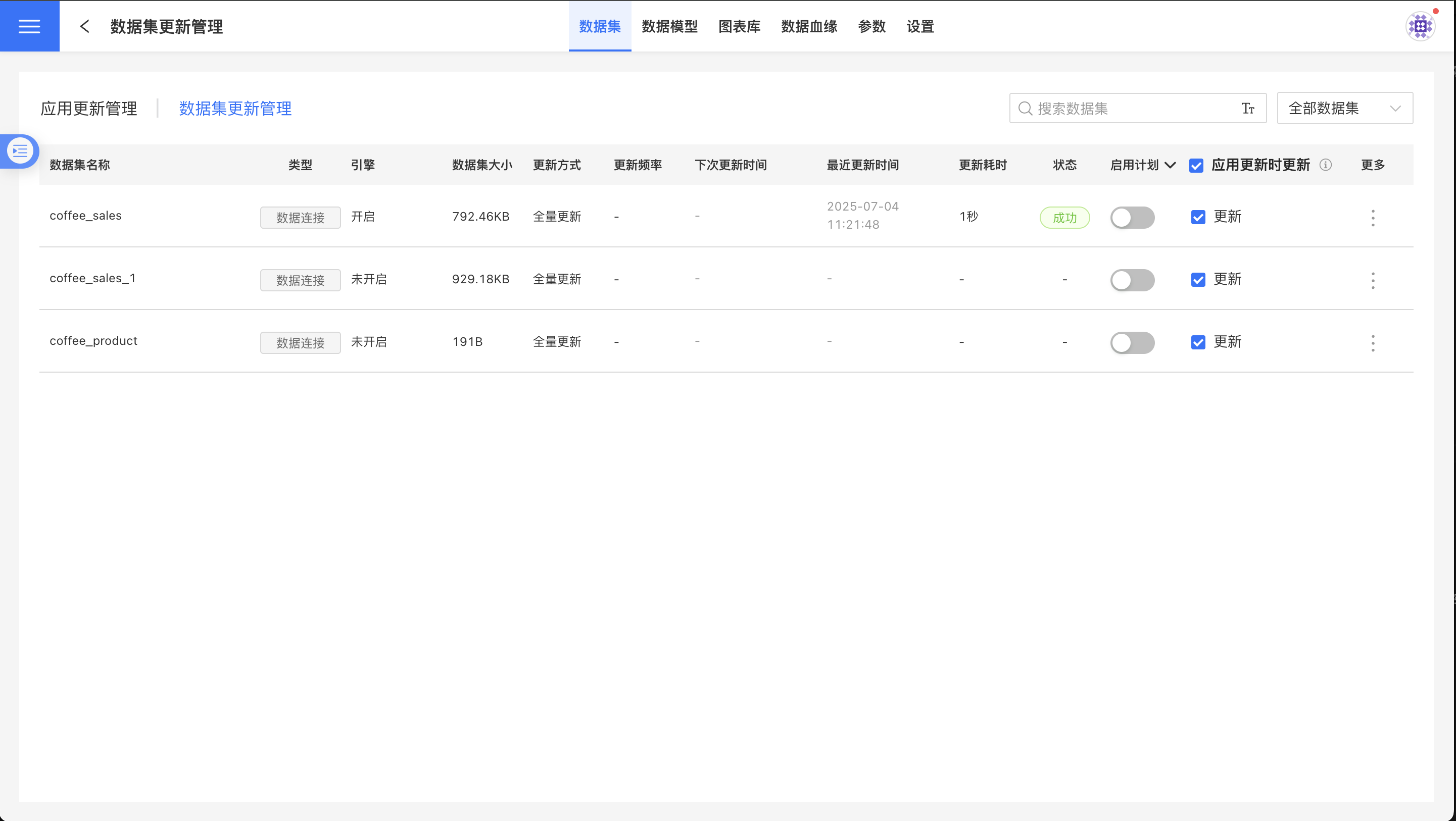

- On the dataset update management page, under Update During Application Update, select the datasets to include in the application-wide update.

- Return to the application update management page. The Updated Datasets section shows the number of datasets selected in the previous step for the application-wide update.

- Click the three-dot menu in the operations section to set the update method.

  - If you select Update Immediately, the datasets within the application update scope start updating immediately.

  - If you select Update Schedule, the datasets within the application update scope are updated according to the configured schedule.

- Click the update history option in the three-dot menu to view the dataset update history.

Dataset Update Management

The dataset update management page displays the update settings of each dataset in the application. Here you can run immediate updates, set update schedules, view update logs, check update status, see the next update time, configure update settings, and choose whether the dataset is updated together with the application.

Dataset Loading Failed

If the data connection is unavailable, the dataset may fail to load. Hover over the loading failure message to see the reason for the failure.