HENGSHI ChatBI

Product Overview

HENGSHI ChatBI is an AI-powered data analysis tool that gives business users an intuitive and efficient way to interact with data. Using natural language processing, users can converse directly with their data and quickly obtain the information they need to support business decisions. HENGSHI ChatBI also supports private deployment, which helps ensure enterprise data security and privacy.

Installation and Configuration

Prerequisites

Before starting to use HENGSHI ChatBI, please ensure you have completed the following steps:

- Installation and Startup: Complete the HENGSHI service installation according to the Installation and Startup Guide.

- AI Assistant Deployment: Complete the installation and deployment of related services according to the AI Assistant Deployment Documentation.

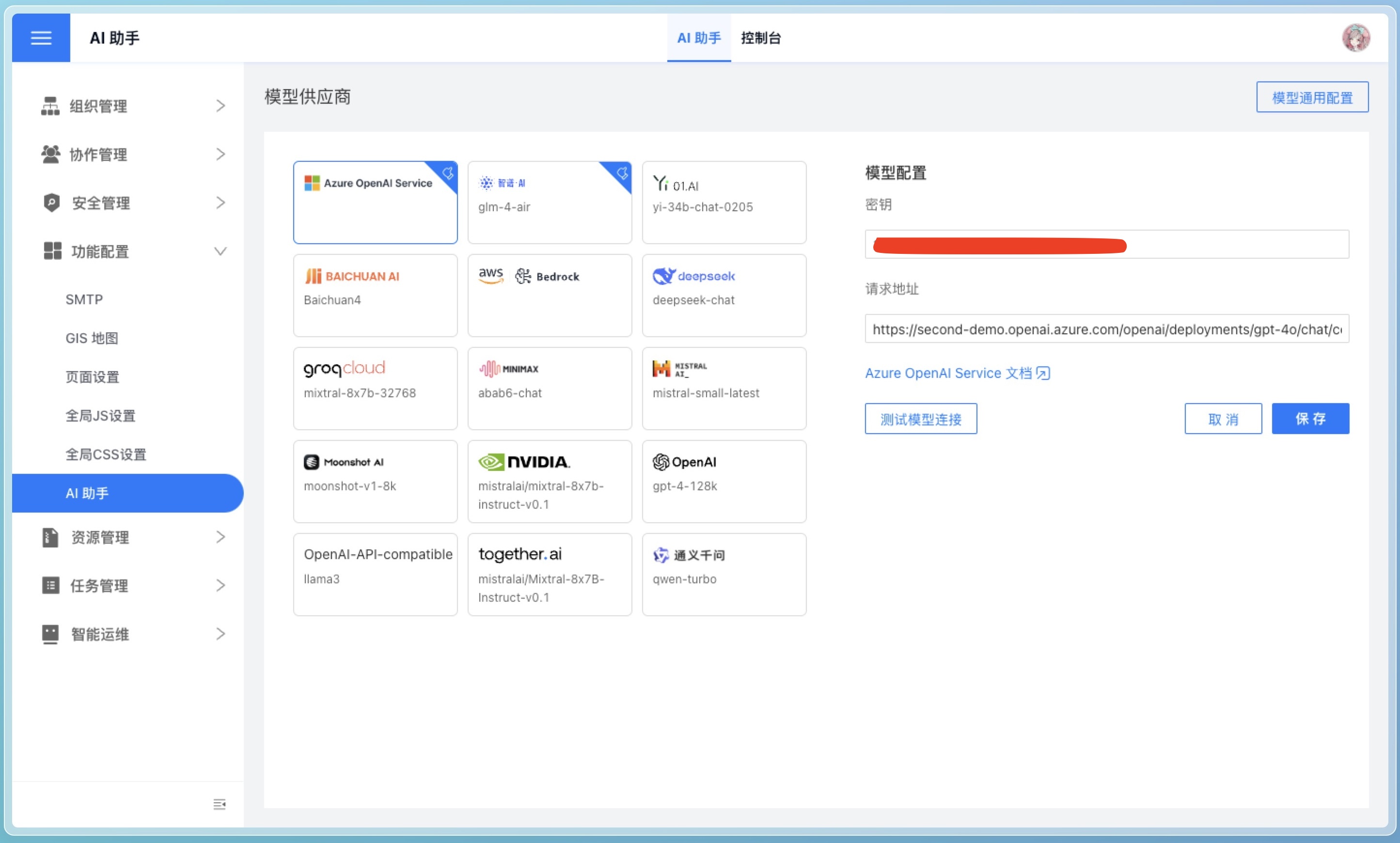

Configure Large Language Model

After starting the HENGSHI service, open the "Feature Configuration" page in System Settings and configure the AI Assistant settings, including the large model's address and key.

Don't understand the configuration items? Please refer to FAQ.

Usage Guide

Improving Large Model Understanding

To ensure ChatBI accurately understands your business needs, the following configurations are recommended:

1. Enhance Understanding of Company Business, Industry Terms, and Private Domain Knowledge

In the AI Assistant Console in System Settings, use natural language to describe your business scenarios and terminology in the User System Prompt. Make sure that Use Model Inference Intent is enabled in the model's general configuration.

For example, if you need to prohibit answering certain types of questions, you can specify in the prompt "do not answer income-related questions."

2. Enhance Data Understanding

- Dataset Naming: Ensure dataset names are concise and clear, reflecting their purpose.

- Knowledge Management: Describe in detail the purpose of datasets, implicit rules (such as filter conditions), synonyms, and field and metric mappings for specialized business terms in Knowledge Management.

- Field Management: Ensure field names are concise and descriptive, avoiding special characters. Explain field usage in detail in Field Description, such as "use me as the default time axis." Additionally, field types should match their intended use - fields for summation should be numeric, date fields should be date type, etc.

- Metric Management: Ensure atomic metric names are concise and descriptive, avoiding special characters. Explain metric usage in detail in Atomic Metric Description.

- Field Hiding: For fields not participating in Q&A, it's recommended to hide them to reduce tokens sent to the large model, improving response speed and reducing costs.

- Field and Metric Distinction: Ensure field names and metric names are not similar to avoid confusion. Hide fields and delete metrics that aren't needed for answering questions.

- Data Vectorization: Publishing an application triggers the dataset's intelligent data vectorization task. You can also manually trigger the "Intelligent Data Vectorization" task. This task deduplicates and vectorizes field values to improve filtering accuracy.

- Intelligent Learning: It's recommended to trigger the "Intelligent Learning" task to convert general examples to dataset-specific examples. After execution, manually check the learning results and perform add/delete/modify operations to enhance assistant capabilities.
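To illustrate why deduplicating and indexing field values (the Data Vectorization item above) can improve filtering, the sketch below maps a user's wording to the closest stored field value. It is not HENGSHI's implementation: `difflib` string similarity from the Python standard library stands in for real vector similarity, and the function names and sample values are hypothetical.

```python
from difflib import get_close_matches


def dedupe_values(values):
    """Return distinct, trimmed field values, preserving first-seen order."""
    seen = set()
    distinct = []
    for value in values:
        cleaned = value.strip()
        key = cleaned.casefold()
        if cleaned and key not in seen:
            seen.add(key)
            distinct.append(cleaned)
    return distinct


def resolve_filter_value(user_term, field_values, cutoff=0.6):
    """Map a user's wording to the closest known field value, or None.

    Lexical similarity is only a stand-in here; a real system would
    compare embeddings instead of character sequences.
    """
    lookup = {v.casefold(): v for v in dedupe_values(field_values)}
    matches = get_close_matches(
        user_term.strip().casefold(), list(lookup), n=1, cutoff=cutoff
    )
    return lookup[matches[0]] if matches else None


# Hypothetical values of a "city" field, with duplicates and stray whitespace.
cities = ["Beijing", "beijing ", "Shanghai", "Shenzhen", "Shanghai"]
print(dedupe_values(cities))
print(resolve_filter_value("Bejing", cities))
```

With a deduplicated value list, a misspelled or loosely worded filter term can still be resolved to a single stored value before the filter is applied.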

3. Enhance Understanding of Complex Calculations

For complex aggregate calculations, it's recommended to define them as metrics to reduce model complexity when retrieving data and avoid misunderstandings of private domain knowledge by the large model.

For example, ROI calculations differ between advertising companies and manufacturing industries, but the large model cannot automatically identify these differences. Therefore, it's recommended to create a metric and describe its meaning in detail to ensure the large model doesn't create calculation formulas on its own.
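As a small illustration of why the definition should be pinned down in a metric, the sketch below applies two common ROI-style formulas to the same numbers and gets different answers. Both formulas and all figures are illustrative assumptions, not definitions taken from HENGSHI or from any particular company.

```python
def roi_net_return(gain, cost):
    """Net-return ROI: (gain - cost) / cost."""
    return (gain - cost) / cost


def roi_revenue_ratio(revenue, spend):
    """Revenue-per-spend ratio (often called ROAS in advertising)."""
    return revenue / spend


# Made-up figures, used for both formulas.
revenue, cost = 150_000.0, 100_000.0
print(roi_net_return(revenue, cost))     # 0.5
print(roi_revenue_ratio(revenue, cost))  # 1.5
```

If the metric is defined once with the formula your business actually uses, the large model only has to select it rather than guess between variants like these.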

Use Cases

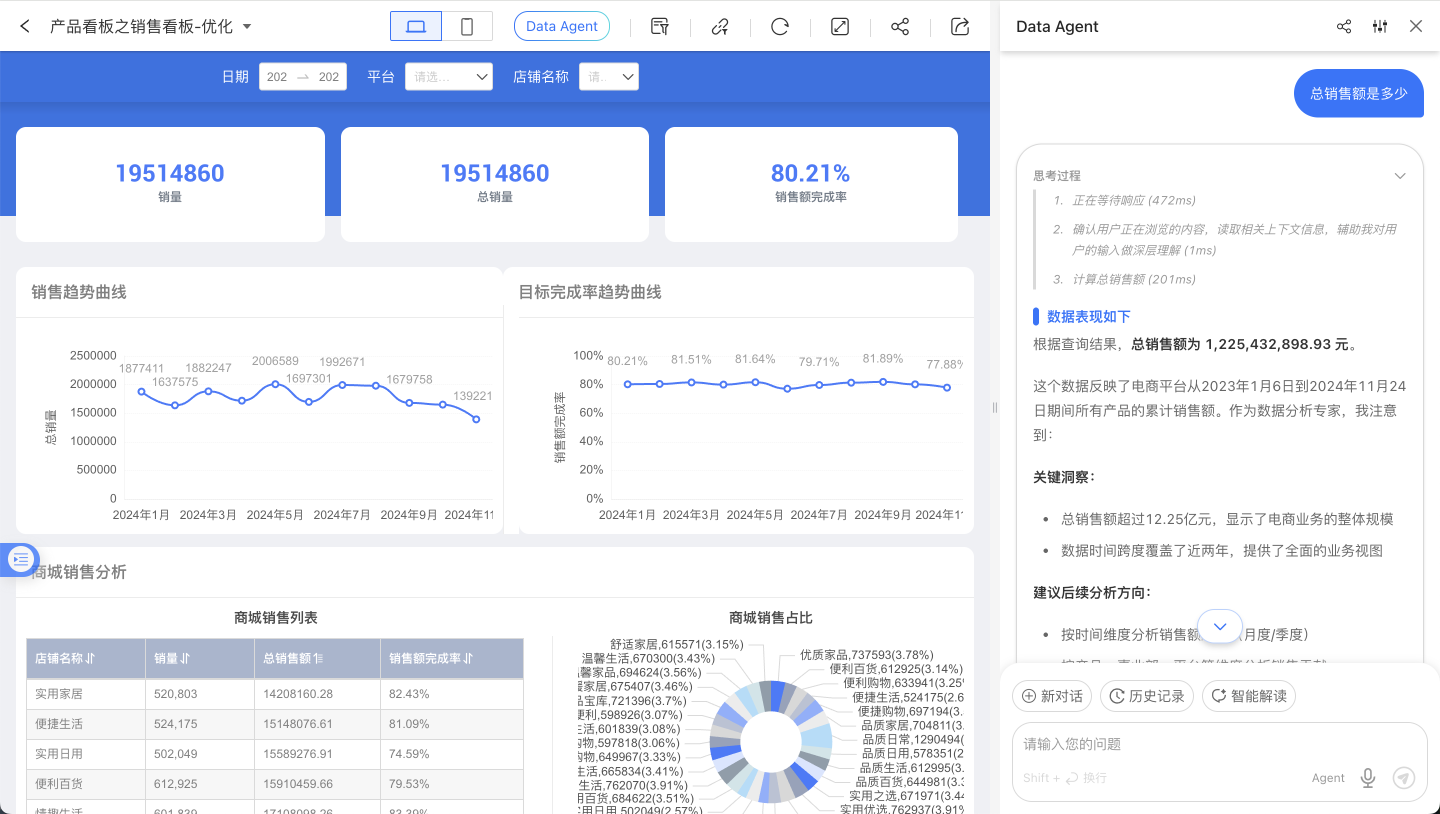

1. Go Analyze

Go Analyze is an enhanced feature of HENGSHI SENSE charts. The system combines metric analysis functionality with published applications, enabling published charts to have secondary analysis capabilities.

Quick Start

- Login: Open your browser, visit the HENGSHI ChatBI login page, and enter your account and password.

- Configure AI Assistant: Go to "System Settings" > AI Assistant Configuration, enter the large model's address and key. (System administrator role required)

- Create Application: On the "Application Creation" page, click Create New Application to create a blank application.

- Create Dataset: On the "Dataset" page, click Create New Dataset, upload your data, or connect to your data through Data Connection.

- Create Dashboard: Create a dashboard in the application, add charts, and select the recently created dataset as the data source.

- Publish Application: After completing chart creation, click Publish Application to publish the application to the Application Market, and check the Go Analyze feature when publishing.

- Go Analyze: In the Application Market, click the published application to enter the application details page, then click the Go Analyze button in the top right corner of the chart to enter the secondary analysis function page.

- Start Conversation: Enter your question in the ChatBI interface, for example, "show last month's sales."

- View Analysis Results: The system will generate charts or tables, and you can interact and perform further analysis directly on the interface.

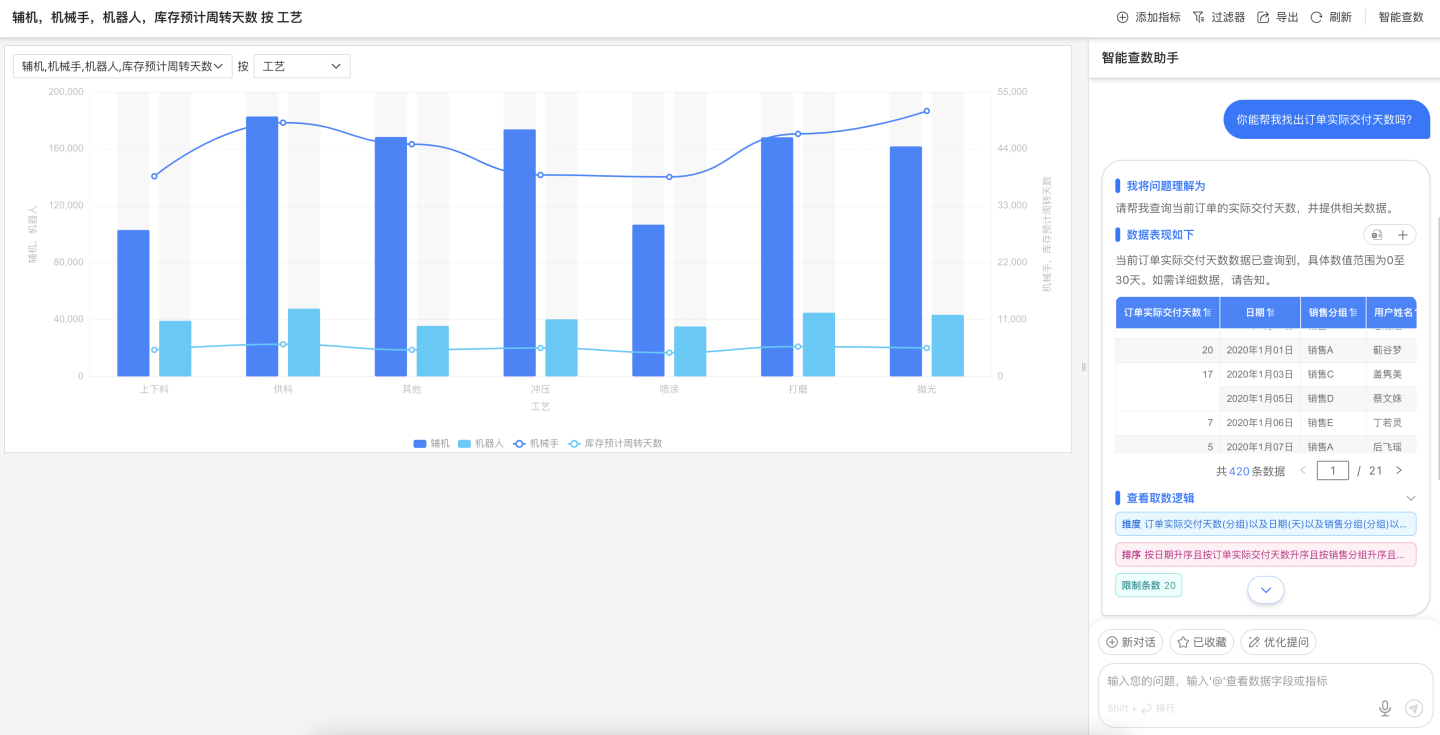

2. Conversation in Dashboard

There's a ChatBI button on the right side of the top bar in the dashboard. Clicking it brings up the intelligent data query assistant, allowing users to directly converse with the assistant about data used in the current dashboard, obtain data insights, and perform secondary analysis.

3. Integrating ChatBI

HENGSHI SENSE provides multiple integration methods. You can choose the appropriate method based on your needs:

IFRAME Integration

Use an iframe to embed ChatBI in an existing system and integrate seamlessly with the HENGSHI SENSE BI PaaS platform. The iframe approach is simple: it uses HENGSHI ChatBI's conversation components, styles, and functions directly and requires no additional development in your system.

SDK Integration

Integrate ChatBI into existing systems through the SDK to implement more complex business logic and achieve finer control, such as a custom UI. The SDK provides rich configuration options to meet personalized needs. Choose the SDK integration method that fits your development team's tech stack. We provide two JS SDKs: the Native JS SDK and the React JS SDK.

How to choose which SDK to use?

The Native JS SDK is pure JavaScript with no dependency on any framework, while the React JS SDK is built on the React framework and requires React to be installed first.

The Native JS SDK provides UI and functionality similar to the iframe integration, using HENGSHI ChatBI's conversation components, styles, and functions directly. In addition, through JS control and SDK initialization parameters, it supports custom API requests, request interception, and more.

The React JS SDK provides only the Completion UI component and the useProvider hook, and is intended for use in your own React project.

API Integration

Integrate ChatBI capabilities into Feishu, DingTalk, WeChat Work, or Dify workflows through the Backend API to implement customized business logic.

Enterprise Instant Messaging Tool Data Q&A Bot

You can create intelligent data Q&A bots through Enterprise Instant Messaging Tool Data Q&A Bot, associate relevant data in HENGSHI ChatBI, and achieve intelligent data Q&A in instant messaging tools. Currently supported enterprise instant messaging tools include WeChat Work, Feishu, and DingTalk.

FAQ

How to troubleshoot model connection failure?

Connection failures can have multiple causes. It's recommended to troubleshoot in the following steps:

Check Request Address

Ensure the model address is correct. Different vendors provide different model addresses. Please check the documentation provided by your vendor.

We can provide initial troubleshooting guidance:

- Model addresses from various model providers usually end with `<host>/chat/completions`, not just the domain, for example `https://api.openai.com/v1/chat/completions`.

- If your model provider is Azure OpenAI, the model address format is `https://<your-tenant>.openai.azure.com/openai/deployments/<your-model>/chat/completions`, where `<your-tenant>` is your tenant name and `<your-model>` is your model name. You need to check these on the Azure OpenAI platform. For more detailed steps, please refer to Connecting Azure OpenAI.

- If your model provider is Tongyi Qianwen, there are two types of addresses: one compatible with the OpenAI format, `https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions`, and another specific to Tongyi Qianwen, `https://dashscope.aliyuncs.com/api/v1/services/aigc/text-generation/generation`. When using the OpenAI-compatible format (the URL contains compatible-mode), please select `OpenAI` or `OpenAI-API-compatible` as the provider in the HENGSHI intelligent query assistant model configuration.

- If your model is privately deployed, please ensure that the model address is correct, the model service is running and provides an HTTP service, and the interface format is compatible with the OpenAI API.
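The address checks above can be sketched as a small pre-flight validator. This is a minimal sketch that only encodes the URL shapes listed in this section; the function name `check_model_address` and the warning texts are hypothetical, and it does not contact the model service.

```python
import re
from urllib.parse import urlparse

# Shape of the Azure OpenAI address described above.
AZURE_PATTERN = re.compile(
    r"^https://[^/]+\.openai\.azure\.com"
    r"/openai/deployments/[^/]+/chat/completions$"
)


def check_model_address(address):
    """Return a list of warnings; an empty list means no obvious problem."""
    parsed = urlparse(address.strip())
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        return ["address is not a full http(s) URL"]

    warnings = []
    host, path = parsed.netloc, parsed.path.rstrip("/")
    if host.endswith(".openai.azure.com"):
        if not AZURE_PATTERN.match(f"{parsed.scheme}://{host}{path}"):
            warnings.append(
                "Azure OpenAI address should end with "
                "/openai/deployments/<your-model>/chat/completions"
            )
    elif host == "dashscope.aliyuncs.com" and "compatible-mode" not in path:
        # Tongyi Qianwen's own endpoint is valid but not OpenAI-compatible.
        if not path.endswith("/text-generation/generation"):
            warnings.append("unrecognized Tongyi Qianwen address")
    elif not path.endswith("/chat/completions"):
        warnings.append(
            "address usually ends with /chat/completions, not just the domain"
        )
    return warnings


print(check_model_address("https://api.openai.com"))
print(check_model_address("https://api.openai.com/v1/chat/completions"))
```

An empty result only means the address has a plausible shape; the key and model name still need to be checked separately.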

Check Key

- Large model interfaces from various model providers usually require a key for access. Please ensure your provided key is correct and has permission to access the model.

- If your company uses its own deployed model, a key might not be necessary. Please confirm with your company's developers or engineering team.

Check Model Name

- Most model providers offer multiple models. Please choose an appropriate model based on your needs and ensure your provided model name is correct and you have permission to access it.

- If your company uses its own deployed model, a model name might not be necessary. Please confirm with your company's developers or engineering team.
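To check the address, key, and model name together outside of HENGSHI, you can send a minimal request to the model yourself. The sketch below assumes an OpenAI-compatible chat completions endpoint that accepts the key as a Bearer token; some providers expect a different header, so check your vendor's documentation. The function names and the placeholder address, model, and key are hypothetical.

```python
import json
import urllib.error
import urllib.request


def build_test_request(address, model, api_key=None):
    """Build (but do not send) a minimal OpenAI-style chat request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": "ping"}],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:  # self-deployed models may not require a key
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        address,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send(request, timeout=10):
    """Send the request; return (status, body), or (None, reason) if unreachable."""
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status, response.read().decode("utf-8")
    except urllib.error.HTTPError as err:
        # The service answered with an error status; its body usually says why.
        return err.code, err.read().decode("utf-8", errors="replace")
    except urllib.error.URLError as err:
        # No HTTP answer at all: wrong host, service down, or network blocked.
        return None, str(err.reason)


# Placeholder values; replace them with your own before calling send().
request = build_test_request(
    "https://example.invalid/v1/chat/completions", "my-model", "sk-test"
)
print(request.get_method(), request.full_url)
```

Calling `send(request)` with real values returns the HTTP status and response body, which usually indicates whether the address, the key, or the model name was rejected.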

How to troubleshoot Q&A errors?

- Is the vector database installed? If not, please complete the installation and deployment of related services according to the AI Assistant Deployment Documentation.

- Can the model connect? Follow the troubleshooting steps from the previous question to check if the model can connect.

How to fill in the vector database address?

Complete the installation and deployment of related services according to the AI Assistant Deployment Documentation. The address does not need to be filled in manually.

Are other vector models supported?

Not currently. If you need this, please contact the after-sales engineers.