AI Assistant

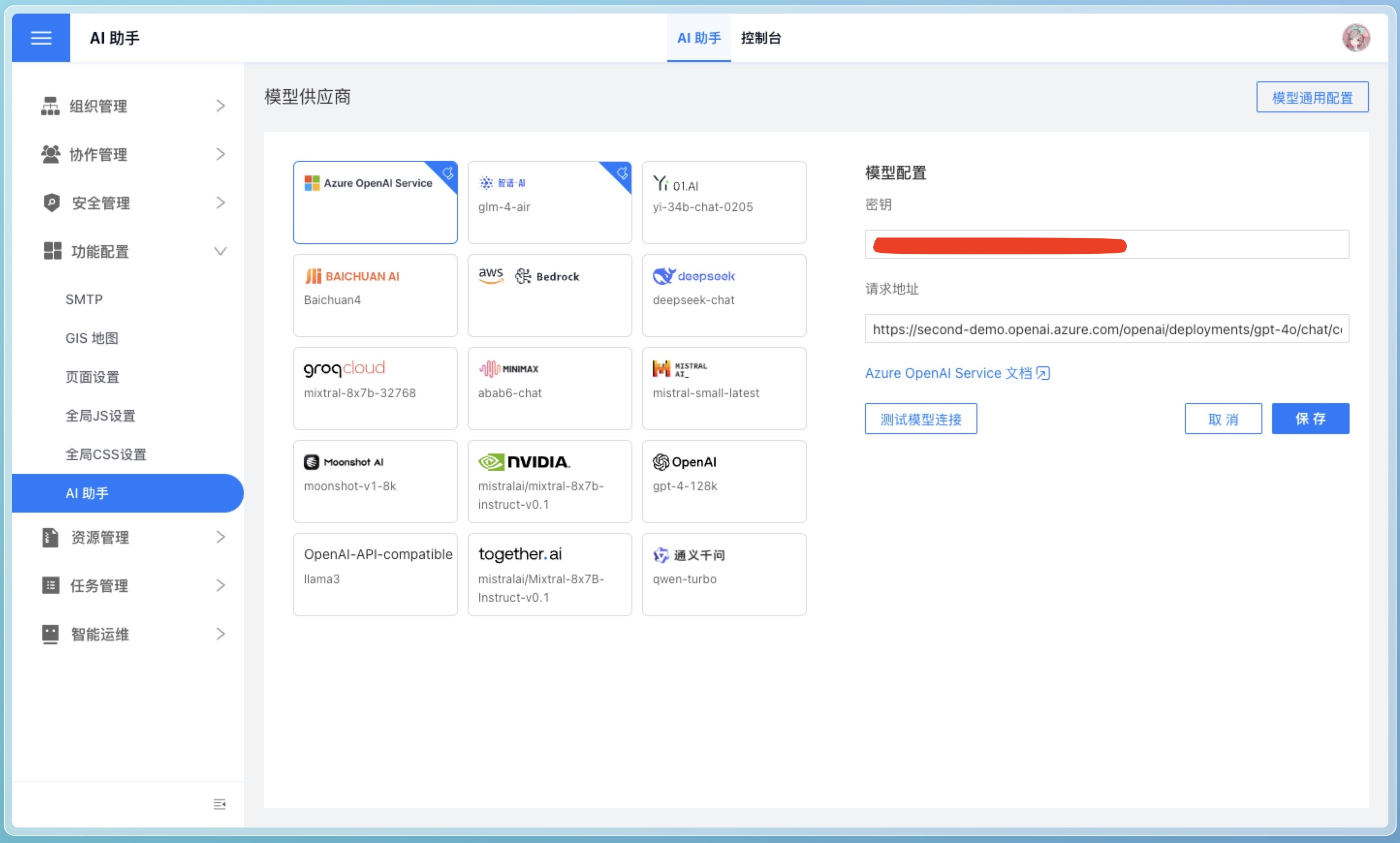

The Model Providers section introduces the model providers supported by the AI Assistant. This document mainly describes the system-level configuration options for the AI Assistant.

General Model Configuration

The following configuration items are system-level settings for the AI assistant and are not specific to any model provider.

LLM_ANALYZE_RAW_DATA

In the page configuration, it is Allow the model to analyze raw data. Its purpose is to set whether the AI assistant analyzes the raw input data. If your data is sensitive, you can disable this configuration.

LLM_ANALYZE_RAW_DATA_LIMIT

In the page configuration, it is the Allowed number of raw data rows for analysis. Its purpose is to set a limit on the number of raw data rows for analysis. This should be configured based on the processing capacity of the model provider, token limitations, and specific requirements.

LLM_ENABLE_SEED

In the page configuration, it is Use seed parameter. Its function is to control whether to enable a random seed when generating responses to bring diversity to the results.

LLM_API_SEED

In the page configuration, it is the seed parameter. Its function is to serve as the random seed number used when generating responses. Used in conjunction with LLM_ENABLE_SEED, it can be randomly specified by the user or kept as default.

LLM_SUGGEST_QUESTION_LOCALLY

In page configuration, it means Do not use the model to generate recommended questions. Its purpose is to specify whether to use a large model when generating recommended questions.

- true: Generated by local rules

- false: Generated by a large model

LLM_SELECT_ALL_FIELDS_THRESHOLD

In the page configuration, it is Allow Model to Analyze Metadata (Threshold). This parameter sets the threshold for selecting all fields. It only takes effect when LLM_SELECT_FIELDS_SHORTCUT is set to true. Adjust as needed.

LLM_SELECT_FIELDS_SHORTCUT

This parameter determines whether to skip field selection and directly select all fields to generate HQL when there are fewer fields. It is used in conjunction with LLM_SELECT_ALL_FIELDS_THRESHOLD and should be configured based on specific operational scenarios. Generally, it does not need to be set to true. However, if you are particularly sensitive to speed or want to skip the field selection step, you can disable this configuration. Note that not selecting fields may affect the accuracy of the final data query.

LLM_API_SLEEP_INTERVAL

In the page configuration, it is API Call Interval (seconds). Its purpose is to set the sleep interval between API requests, measured in seconds. Configure it based on the required request frequency. Consider setting it for large model APIs that require rate limiting.

HISTORY_LIMIT

In the page configuration, it refers to the number of consecutive conversation context entries. It determines the number of historical conversation entries carried when interacting with the large model.

LLM_ENABLE_DRIVER

In the page configuration, it is Use Model Inference Intent. Its function is to determine whether to enable AI to identify and disable questions, and optimize questions based on context. The context range is determined by HISTORY_LIMIT. Generally, it is only necessary to enable this when context referencing and the need to disable questions are required.

MAX_ITERATIONS

In the page configuration, it represents the Maximum Iterations for Model Inference. It is used to set the maximum number of iterations to control the number of failure loops when processing large models.

LLM_API_REQUIRE_JSON_RESP

Determine whether the API response format must be JSON. This configuration item is only supported by OpenAI and generally does not need to be modified.

LLM_HQL_USE_MULTI_STEPS

Whether to optimize trend-related and year-over-year/month-over-month type questions through multiple steps. Adjust as needed. Multiple steps may be relatively slower.

VECTOR_SEARCH_FIELD_VALUE_NUM_LIMIT

The upper limit on the number of distinct values for tokenized search dataset fields. Any excess distinct values will not be extracted. Set this limit as appropriate.

CHAT_BEGIN_WITH_SUGGEST_QUESTION

After jumping to analysis, will a few suggested questions be provided to the user? Enable as needed.

CHAT_END_WITH_SUGGEST_QUESTION

After each question round is answered, whether to provide the user with a few suggested questions. Enable as needed. Disabling this can save some time.

Vector Library Configuration

ENABLE_VECTOR

Enable the vector search feature. The AI assistant uses the large model API to select the most relevant examples for the question. Once vector search is enabled, the AI assistant will combine the results from the large model API and the vector search.

VECTOR_MODEL

Vectorized model, configured based on whether vector search capability is required. It needs to be used in conjunction with VECTOR_ENDPOINT. The system's built-in vector service already includes the model intfloat/multilingual-e5-base. This model does not require downloading. If other models are needed, currently, vector models available on Hugging Face are supported. It is important to note that the vector service must ensure connectivity to the Hugging Face website; otherwise, the model download will fail.

VECTOR_ENDPOINT

Vectorization API address, set based on whether vector search capability is needed. After installing the related vector database service, it defaults to the built-in vector service.

VECTOR_SEARCH_RELATIVE_FUNCTIONS

Whether to search for function descriptions related to the issue. When enabled, it will search for function descriptions related to the issue, and correspondingly, the prompt words will become larger. This switch only takes effect when ENABLE_VECTOR is enabled.

For detailed vector library configuration, see: AI Configuration

Console

In the console, we publicly display the workflow of HENGSHI ChatBI, where each node is an editable prompt. Additionally, you can directly interact with the AI assistant on this page, making it convenient for troubleshooting.

Enhancement

Editing prompts requires a certain level of understanding of large models. It is recommended that this operation be performed by a system administrator.

UserSystem Prompt

Large models possess most general knowledge, but for specific business, industry jargon, or proprietary knowledge, prompts can enhance the model's understanding.

For example, in the e-commerce field, terms like "big promotion" and "hot-selling item" may not have clear meanings to the model. Through prompts, the model's understanding of these terms can be improved.

A large model might typically interpret "big promotion" as a large-scale promotional event conducted by merchants or platforms during a specific time period. Such events are often concentrated around shopping festivals, holidays, or themed days, such as "Double 11" or "618."

If you want the model to accurately understand the meaning of "big promotion," you can explicitly define it in the prompt as referring to events like Double 11.

Conclusion Prompt Answer Summary Prompt

After the AI assistant retrieves data based on the user's query, it will prompt the large model to summarize the query results using this prompt to answer the question.

The system's default summary prompt is relatively basic. You can modify it to better align with real-world scenarios according to your business needs, specifically tailored to your company's operations.

SuggestQuestions Prompt Recommended Question Prompts

The system's default recommended question prompts are relatively basic. You can modify them to better align with actual scenarios based on your business needs, tailoring the recommended question logic prompts to be closely related to your company's business.