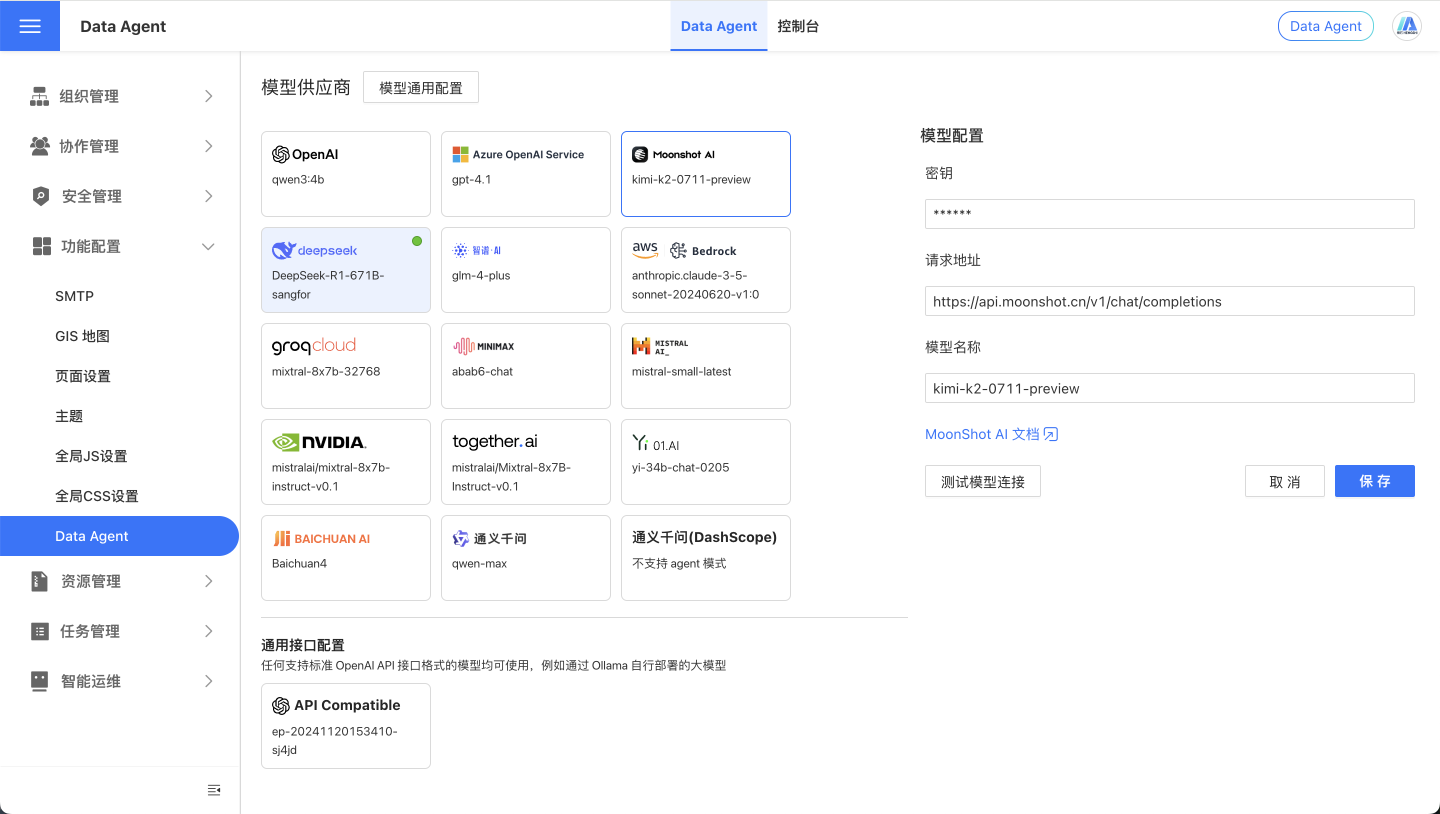

Data Agent

The Model Providers section describes the supported model providers. This document covers the system-level model configuration options of the Data Agent.

General Model Configuration

The following configuration items are system-level configurations for the Data Agent and are not specific to any model provider.

LLM_ANALYZE_RAW_DATA

On the configuration page, this setting appears as Allow Model to Analyze Raw Data. It controls whether the Data Agent lets the model analyze raw data. If your data is sensitive, you can disable it.

LLM_ANALYZE_RAW_DATA_LIMIT

On the configuration page, this setting appears as Allowed number of raw data rows for analysis. It limits how many raw data rows are sent to the model for analysis. Configure it based on the model provider's processing capacity, its token limits, and your specific requirements.
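The two raw-data settings above can be pictured as a filter applied before rows are placed in a prompt. The following is a minimal Python sketch, not the HENGSHI SENSE implementation; the function name `rows_for_model` and the row format (a list of dicts) are hypothetical.

```python
from typing import Any


def rows_for_model(rows: list[dict[str, Any]], allow_raw_data: bool, row_limit: int) -> list[dict[str, Any]]:
    """Return the raw rows that may be placed in a model prompt (hypothetical helper)."""
    if not allow_raw_data:
        # LLM_ANALYZE_RAW_DATA disabled: no raw rows are sent to the model
        return []
    # LLM_ANALYZE_RAW_DATA_LIMIT: send at most row_limit rows
    return rows[: max(row_limit, 0)]


rows = [
    {"region": "East", "sales": 120},
    {"region": "West", "sales": 95},
    {"region": "North", "sales": 80},
]
print(rows_for_model(rows, allow_raw_data=True, row_limit=2))  # only the first two rows
```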

LLM_ENABLE_SEED

On the configuration page, this setting appears as Use seed parameter. It controls whether a random seed is used when generating responses, which affects how varied the results are.

LLM_API_SEED

On the configuration page, this setting appears as seed parameter. It is the seed value used when generating responses and takes effect together with LLM_ENABLE_SEED. You can specify your own value or keep the default.

LLM_SUGGEST_QUESTION_LOCALLY

On the configuration page, this setting appears as Do not use the model to generate recommended questions. It specifies whether recommended questions are generated by the large model or by local rules:

- true: Generated by local rules

- false: Generated by a large model

LLM_SELECT_ALL_FIELDS_THRESHOLD

On the configuration page, this setting appears as Allow Model to Analyze Metadata (Threshold). It sets the threshold for selecting all fields and takes effect only when LLM_SELECT_FIELDS_SHORTCUT is set to true.

LLM_SELECT_FIELDS_SHORTCUT

When there are few fields, this parameter determines whether to skip field selection and directly use all fields to generate HQL. It works together with LLM_SELECT_ALL_FIELDS_THRESHOLD and should be configured for your specific scenario. Generally, it does not need to be set to true. If speed is critical or you want to skip the field selection step, you can enable it; however, skipping field selection may reduce the accuracy of the final data query.
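How LLM_SELECT_FIELDS_SHORTCUT and LLM_SELECT_ALL_FIELDS_THRESHOLD interact can be sketched as follows. This is an illustration only: `choose_fields` and the `llm_select` callback are hypothetical names, and reading "few fields" as "field count at or below the threshold" is an assumption.

```python
from typing import Callable, Sequence


def choose_fields(
    fields: Sequence[str],
    shortcut_enabled: bool,
    threshold: int,
    llm_select: Callable[[Sequence[str]], list[str]],
) -> list[str]:
    """Pick the fields used for HQL generation (hypothetical sketch)."""
    if shortcut_enabled and len(fields) <= threshold:
        # Few fields: skip the model-based selection step and use them all
        return list(fields)
    # Otherwise let the model choose the relevant fields
    return llm_select(fields)
```

The shortcut saves one model round trip, which is why it trades some query accuracy for speed.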

LLM_API_SLEEP_INTERVAL

On the configuration page, this setting appears as API Call Interval (seconds). It sets the sleep interval, in seconds, between API requests. Consider setting it for large model APIs that enforce rate limits, based on the request frequency they allow.
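A minimal sketch of spacing out model API calls, assuming the interval acts as a minimum gap between consecutive requests (the product may instead sleep a fixed time after each call). `ThrottledCaller` is a hypothetical name.

```python
import time


class ThrottledCaller:
    """Wait at least `interval_seconds` between consecutive calls (sketch of LLM_API_SLEEP_INTERVAL)."""

    def __init__(self, interval_seconds: float) -> None:
        self.interval_seconds = interval_seconds
        self._last_call = None  # monotonic timestamp of the previous call, if any

    def call(self, fn, *args, **kwargs):
        if self._last_call is not None:
            remaining = self.interval_seconds - (time.monotonic() - self._last_call)
            if remaining > 0:
                # Too soon after the previous request: sleep off the rest of the interval
                time.sleep(remaining)
        self._last_call = time.monotonic()
        return fn(*args, **kwargs)
```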

HISTORY_LIMIT

On the configuration page, this setting appears as the number of consecutive conversation context entries. It determines how many historical conversation entries are carried in each interaction with the large model.
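A sketch of how a history limit might trim the context, assuming each list element is one conversation entry; what the product counts as an entry is not specified here, and `trim_history` is hypothetical.

```python
def trim_history(entries: list, history_limit: int) -> list:
    """Keep only the most recent `history_limit` conversation entries (hypothetical helper)."""
    if history_limit <= 0:
        # No history is carried at all
        return []
    return entries[-history_limit:]
```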

MAX_ITERATIONS

On the configuration page, this setting appears as Maximum Iterations for Model Inference. It sets the maximum number of iterations, limiting how many times the Data Agent loops when handling large model failures.
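A sketch of a bounded retry loop in the spirit of MAX_ITERATIONS. `run_with_retries` and `flaky_step` are hypothetical; the Data Agent's actual failure handling is not documented here.

```python
def run_with_retries(step, max_iterations: int):
    """Call `step(attempt)` until it succeeds or max_iterations attempts are used (hypothetical)."""
    last_error = None
    for attempt in range(1, max_iterations + 1):
        try:
            return step(attempt)
        except Exception as exc:  # a real agent would catch narrower, model-specific errors
            last_error = exc  # remember the failure and loop again
    raise RuntimeError(f"step failed after {max_iterations} iterations") from last_error


def flaky_step(attempt: int) -> str:
    # Hypothetical step whose model output is unusable on the first two attempts
    if attempt < 3:
        raise ValueError("model output could not be parsed")
    return f"succeeded on attempt {attempt}"
```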

LLM_HQL_USE_MULTI_STEPS

Whether to improve instruction adherence for trend, year-over-year, and month-over-month questions by processing them in multiple steps. Enable as appropriate; the multi-step process may be slower.

CHAT_BEGIN_WITH_SUGGEST_QUESTION

Whether to offer the user several suggested questions after jumping to analysis. Enable as needed.

CHAT_END_WITH_SUGGEST_QUESTION

Whether to offer the user several suggested questions after each question round. Enable as needed; disabling it saves some time.

LLM_MAX_TOKENS

The maximum number of output tokens for the large model. The default value is 1000.

LLM_EXAMPLE_SIMILAR_COUNT

The limit on the number of similar examples to search for, effective in the example selection step of Workflow mode. The default value is 2.

LLM_RELATIVE_FUNCTIONS_COUNT

The maximum number of related functions to retrieve, effective in the function selection step of Workflow mode. The default value is 3.

LLM_SUMMARY_MAX_DATA_BYTES

The maximum number of bytes for the data portion sent when the model summarizes the results. The default value is 5000 bytes. This is effective in the summary step of Workflow mode.

LLM_ENABLE_SUMMARY

Whether to enable summarization. It is effective in the summarization step of Workflow mode, with a default value of true. If only data and charts are needed without summarization, it can be disabled to save time and costs.

LLM_RAW_DATA_MAX_VALUE_SIZE

Raw dataset field values larger than this many bytes are not sent to the large language model. The default value is 30 bytes. Text dimensions, dates, and similar fields are usually short, and sending very long content such as HTML to the model adds little value.
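A sketch of the per-value size check, assuming sizes are measured as UTF-8 bytes of each value's string form and that oversized values are simply omitted (the product might use a placeholder instead). `strip_long_values` is hypothetical.

```python
def strip_long_values(row: dict, max_value_bytes: int = 30) -> dict:
    """Drop field values whose UTF-8 size exceeds max_value_bytes (sketch of LLM_RAW_DATA_MAX_VALUE_SIZE)."""
    return {
        field: value
        for field, value in row.items()
        if len(str(value).encode("utf-8")) <= max_value_bytes
    }
```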

CHAT_TOKEN_MATCH_SIMILARITY_THRESHOLD

Similarity threshold for text search. It usually does not need to be adjusted.

CHAT_TOKEN_MATCH_WEIGHT

Score weight for text search. It usually does not need to be adjusted.

CHAT_VECTOR_MATCH_SIMILARITY_THRESHOLD

Similarity threshold for vector search. It usually does not need to be adjusted.

CHAT_VECTOR_MATCH_WEIGHT

Score weight for vector search. It usually does not need to be adjusted.
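The four search settings above suggest a weighted combination of text and vector similarity. The exact scoring formula is not documented, so the following is only a hypothetical sketch: similarities below their threshold are ignored, and the rest are combined linearly with the configured weights. `MatchConfig` and `hybrid_score` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class MatchConfig:
    token_threshold: float   # CHAT_TOKEN_MATCH_SIMILARITY_THRESHOLD
    token_weight: float      # CHAT_TOKEN_MATCH_WEIGHT
    vector_threshold: float  # CHAT_VECTOR_MATCH_SIMILARITY_THRESHOLD
    vector_weight: float     # CHAT_VECTOR_MATCH_WEIGHT


def hybrid_score(token_sim: float, vector_sim: float, cfg: MatchConfig) -> float:
    """Combine text and vector similarity (assumed formula, not the product's)."""
    # A similarity below its threshold contributes nothing
    token_part = token_sim if token_sim >= cfg.token_threshold else 0.0
    vector_part = vector_sim if vector_sim >= cfg.vector_threshold else 0.0
    return cfg.token_weight * token_part + cfg.vector_weight * vector_part
```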

CHAT_ENABLE_PROHIBITED_QUESTION

Whether to enable the prohibited question feature. Once enabled, you can configure rules for prohibited questions in the UserSystem Prompt in the console. The default is false.

USE_LLM_TO_SELECT_DATASETS

Whether to use a large language model to refine the selection of datasets. The default is false. When disabled, datasets are primarily selected using vector and tokenization algorithms. When enabled, the large language model performs a secondary screening of the results from vectors and tokenization to obtain the most relevant datasets. If the selection results are unsatisfactory, consider enabling this option and defining the selection rules in Dataset Knowledge Management.

LLM_SELECT_DATASETS_NUM

The number of top-scoring candidate datasets, taken from the initial vector and tokenization screening, from which the large model selects datasets. This setting is meaningful only when USE_LLM_TO_SELECT_DATASETS is enabled. The default is 15.

CHAT_SYNC_TIMEOUT

The default maximum time, in milliseconds, to wait for a synchronous Q&A result during an API call. The default is 60000 milliseconds (60 seconds). An API request can override this value by setting a timeout in its URL parameters.

CHAT_DATE_FIELD_KEYWORDS

If the question contains one of these keywords but no date-type field was selected during the field selection step, a date-type field is added automatically. The default value contains keywords for year, month, day, week, quarter, date, and time (in several forms), as well as YTD, Q, and keywords for change and trend.

CHAT_DATE_TREND_KEYWORDS

If the question contains any of these keywords, it is treated as a trend calculation. The default value is "变化,走势,趋势,trend".

CHAT_DATE_COMPARE_KEYWORDS

If the question contains any of these keywords, it is treated as a year-over-year or month-over-month calculation. The default value is "同比,环比,growth,增量,减少,减量,异常,同期,相比,相对,波动,growth,decline,abnormal,fluctuation".

CHAT_RATIO_KEYWORDS

If the question contains any of these keywords, it is treated as a ratio calculation. The default value is "百分比,比例,比率,占比,percentage,proportion,ratio,fraction,rate".
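The keyword settings for trend, year-over-year/month-over-month, and ratio questions can be illustrated with a simple case-insensitive substring check. This sketch uses the documented default values; the matching strategy and the names `parse_keywords` and `classify_question` are assumptions, not the product's code.

```python
TREND_KEYWORDS = "变化,走势,趋势,trend"
COMPARE_KEYWORDS = "同比,环比,growth,增量,减少,减量,异常,同期,相比,相对,波动,growth,decline,abnormal,fluctuation"
RATIO_KEYWORDS = "百分比,比例,比率,占比,percentage,proportion,ratio,fraction,rate"


def parse_keywords(setting: str) -> list[str]:
    """Split a comma-separated setting into lowercase keywords, skipping blanks."""
    return [kw.strip().lower() for kw in setting.split(",") if kw.strip()]


def classify_question(question: str) -> list[str]:
    """Return the calculation types whose keywords appear in the question (substring match)."""
    text = question.lower()
    kinds = []
    for kind, setting in (("trend", TREND_KEYWORDS), ("compare", COMPARE_KEYWORDS), ("ratio", RATIO_KEYWORDS)):
        if any(kw in text for kw in parse_keywords(setting)):
            kinds.append(kind)
    return kinds
```

Plain substring matching is easy to reason about but can misfire: the English keyword rate also matches inside generate, so this sketch classifies "Generate a sales report" as a ratio question.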

CHAT_FILTER_TOKENS

Meaningless words to filter out during tokenization. The default value is "的,于,了,为,年,月,日,时,分,秒,季,周,,,?,;,!,在,各,是,多少,(,)".
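A sketch of token filtering, assuming the question has already been split into tokens by a tokenizer (not shown) and the setting is a comma-separated list. `filter_tokens` is hypothetical, and the example uses a shortened filter list rather than the full default.

```python
def filter_tokens(tokens: list[str], filter_setting: str) -> list[str]:
    """Drop tokens listed in a comma-separated filter setting (sketch of CHAT_FILTER_TOKENS)."""
    stop_words = {word for word in filter_setting.split(",") if word}
    return [token for token in tokens if token not in stop_words]
```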

USE_LLM_TO_SELECT_EXAMPLES

Whether to use a large language model to select examples. The default is true. This is effective in Workflow mode. The large language model will select examples with relatively higher relevance.

ENABLE_SMART_CHART_TYPE_DETECTION

Enable smart chart type detection. The default value is true. If you want all chart types to be tables, you can disable it. The rules for determining chart types based on axes are as follows:

- 1 time dimension and 1 or more measures: Line Chart

- 1 time dimension, 1 text dimension, and 1 measure: Area Chart

- 1 text dimension and 1 measure: Bar Chart

- 1 text dimension and 2 measures: Grouped Bar Chart

- Others default to Table.

ENABLE_KPI_CHART_DETERMINE_BY_DATA

Whether to change the chart type to KPI when the data result is a single row and a single column containing a number. The default is true. If you want all chart types to remain tables, you can disable this option.
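The chart-type rules and the KPI override above can be expressed as a small decision function. This is a sketch, not the product's code: the function name, its parameters, and the decision order (KPI check first, then the axis rules) are assumptions, and the time-dimension and text-dimension rules are read as excluding any dimension they do not list.

```python
from numbers import Number


def detect_chart_type(time_dims: int, text_dims: int, measures: int, rows: list,
                      smart_detection: bool = True, kpi_by_data: bool = True) -> str:
    """Choose a chart type from axis counts and the result rows (hypothetical sketch)."""
    # ENABLE_KPI_CHART_DETERMINE_BY_DATA: a single numeric cell becomes a KPI
    if kpi_by_data and len(rows) == 1 and len(rows[0]) == 1:
        (value,) = rows[0]
        if isinstance(value, Number) and not isinstance(value, bool):
            return "KPI"
    # ENABLE_SMART_CHART_TYPE_DETECTION disabled: always a table
    if not smart_detection:
        return "Table"
    if time_dims == 1 and text_dims == 0 and measures >= 1:
        return "Line"
    if time_dims == 1 and text_dims == 1 and measures == 1:
        return "Area"
    if time_dims == 0 and text_dims == 1 and measures == 1:
        return "Bar"
    if time_dims == 0 and text_dims == 1 and measures == 2:
        return "Grouped Bar"
    return "Table"
```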

MEASURE_TOKENIZE_BATCH_SIZE

Batch size for tokenizing business measures. Generally, no need to modify. The default is 1000.

ENABLE_USER_ATTRIBUTE_PROMPT

Whether to enable the user attribute prompt. When enabled, relevant information will be provided to the large model based on the user attributes entered by the user. Enabled by default.

ENABLE_LLM_API_PROXY

Whether to enable the large model API proxy. Once enabled, HENGSHI SENSE can be used to call the large model's /chat/completions interface. It is enabled by default. The Agent mode also uses HENGSHI SENSE to call the large model interface.

ENABLE_TENANT_LLM_API_PROXY

Whether tenants can use the large model API proxy, enabled by default. The Agent mode also calls the large model interface through HENGSHI.

CHAT_DATA_DEFAULT_LIMIT

For AI-generated charts, if the AI does not set a limit based on the question's semantics, a default limit of 100 is applied.

CHAT_WITH_NO_THINK_PROMPT

Whether to add a "no think" prompt to large model conversations. For Alibaba's Qwen3 series models, this disables thinking and improves speed. For Zhipu's GLM-4.5 and later models, this switch also controls whether thinking is disabled. The default is false, meaning thinking stays enabled.

USE_FALLBACK_CHART

Whether to enable the fallback chart, which is generated automatically from vector query results. The default is false. Fallback charts have low accuracy and serve only as a last resort.

USE_MAX_COMPLETION_TOKENS

Whether to rename the max_tokens parameter to max_completion_tokens. Disabled by default. GPT-5 and later models use the max_completion_tokens parameter, so enable this option for them.

USE_TEMPERATURE

Whether to send the temperature parameter. Enabled by default. Some models do not support the temperature parameter; disable this option for them.
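Several settings above (LLM_MAX_TOKENS, USE_MAX_COMPLETION_TOKENS, USE_TEMPERATURE, LLM_ENABLE_SEED, LLM_API_SEED) correspond to fields of an OpenAI-compatible /chat/completions request body. The sketch below shows one way they could shape that body; `build_chat_payload`, the model name, and the default temperature of 0.0 are hypothetical, and the request the product actually sends may differ.

```python
def build_chat_payload(model: str, messages: list, max_tokens: int = 1000,
                       use_max_completion_tokens: bool = False, use_temperature: bool = True,
                       temperature: float = 0.0, enable_seed: bool = False, seed=None) -> dict:
    """Assemble a chat completion request body from the settings (hypothetical sketch)."""
    payload = {"model": model, "messages": messages}
    # USE_MAX_COMPLETION_TOKENS picks which parameter name carries LLM_MAX_TOKENS
    token_key = "max_completion_tokens" if use_max_completion_tokens else "max_tokens"
    payload[token_key] = max_tokens
    if use_temperature:
        # USE_TEMPERATURE: omit the field for models that reject it
        payload["temperature"] = temperature
    if enable_seed and seed is not None:
        # LLM_ENABLE_SEED / LLM_API_SEED
        payload["seed"] = seed
    return payload
```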

ENABLE_QUESTION_REFINE

Whether to enable the user question refinement feature. When enabled, user questions will be refined before being sent to the large model. Enabled by default. Effective in Workflow mode. If the question is already specific enough, you can disable it to save time and costs.

SPLIT_FIELDS_BY_DATASET_IN_HQL_GENERATOR

Whether to list fields and metrics by dataset in HQLGenerator. Disabled by default. Effective in Workflow mode. Enabling this can improve the accuracy of field and metric selection in scenarios involving data models composed of multiple datasets, but it will increase the length of the prompt.

LLM_API_TIMEOUT_SECONDS

Timeout duration (in seconds) for large model API calls, default is 600 seconds.

NODE_AGENT_ENABLE

Whether to enable the HENGSHI AI Node Agent API, which allows the AI Agent to be used through API calls. Disabled by default. Enabling it requires additional dependencies and settings; see How to Configure AI API Calls Using Agent Mode below.

NODE_AGENT_TIMEOUT

HENGSHI AI Node Agent execution timeout in milliseconds, default is 600,000 milliseconds (10 minutes).

NODE_AGENT_CLIENT_ID

The HENGSHI SENSE platform API clientId that the HENGSHI AI Node Agent uses to run. A system administrator must create it with the sudo privilege and configure it here.

EXPAND_AGENT_REASONING

Set whether to automatically expand the agent's reasoning process. By default, it is expanded.

PREFER_AGENT_MODE

Whether to use Agent mode by default. Enabled by default; when turned off, Workflow mode is the default.

SCRATCH_PAD_TRIGGER

Set keywords to force the agent to use the scratch pad tool, with keywords separated by commas.

TABLE_FLEX_ROWS

The maximum number of rows visible in a table during a conversation. The default value is 5.

AWS Bedrock Related Configuration

LLM_AWS_BEDROCK_REGION

AWS Bedrock region, required only if using AWS Bedrock. The default is ap-southeast-2. For details, please refer to the AWS Bedrock documentation.

LLM_ANTHROPIC_VERSION

The Anthropic Claude API version used on AWS Bedrock. Configure it only when using Anthropic Claude models on AWS Bedrock. The default is bedrock-2023-05-31. For details, see the AWS Bedrock documentation for Anthropic Claude models.

Vector Library Configuration

ENABLE_VECTOR

Enables the vector search feature. The AI assistant uses the large model API to select the examples most relevant to the question; with vector search enabled, it combines the results from the large model API with those from vector search.

VECTOR_MODEL

The vectorization model. Set it if vector search is required, together with VECTOR_ENDPOINT. The system's built-in vector service already includes the model intfloat/multilingual-e5-base, which does not need to be downloaded. Other vector models from Hugging Face are also supported, but the vector service must be able to reach the Hugging Face website; otherwise, the model download fails.

VECTOR_ENDPOINT

The vectorization API address. Set it if vector search is required. Once the related vector database service is installed, it defaults to the built-in vector service.

VECTOR_SEARCH_RELATIVE_FUNCTIONS

Whether to search for function descriptions related to the question. When enabled, related function descriptions are retrieved and added to the prompt, which makes the prompt longer. This switch takes effect only when ENABLE_VECTOR is enabled.

VECTOR_SEARCH_FIELD_VALUE_NUM_LIMIT

The maximum number of distinct values extracted from a dataset field for tokenized search. Values beyond this limit are not extracted. Set as appropriate.

INIT_VECTOR_PARTITIONS_SIZE

The batch size used when vectorizing examples. Generally, no adjustment is needed. The default is 100.

VECTOR_MODEL_KEEP_COUNT

The maximum number of historical vector models' vectorized data to retain when switching vector models. The default is 5. Generally, no adjustment is needed.

INIT_VECTOR_INTERRUPTION_THRESHOLDS

The maximum allowed number of failures when vectorizing the example library. The default is 100. Generally, no adjustment is needed.

For detailed vector library configuration, see: AI Configuration

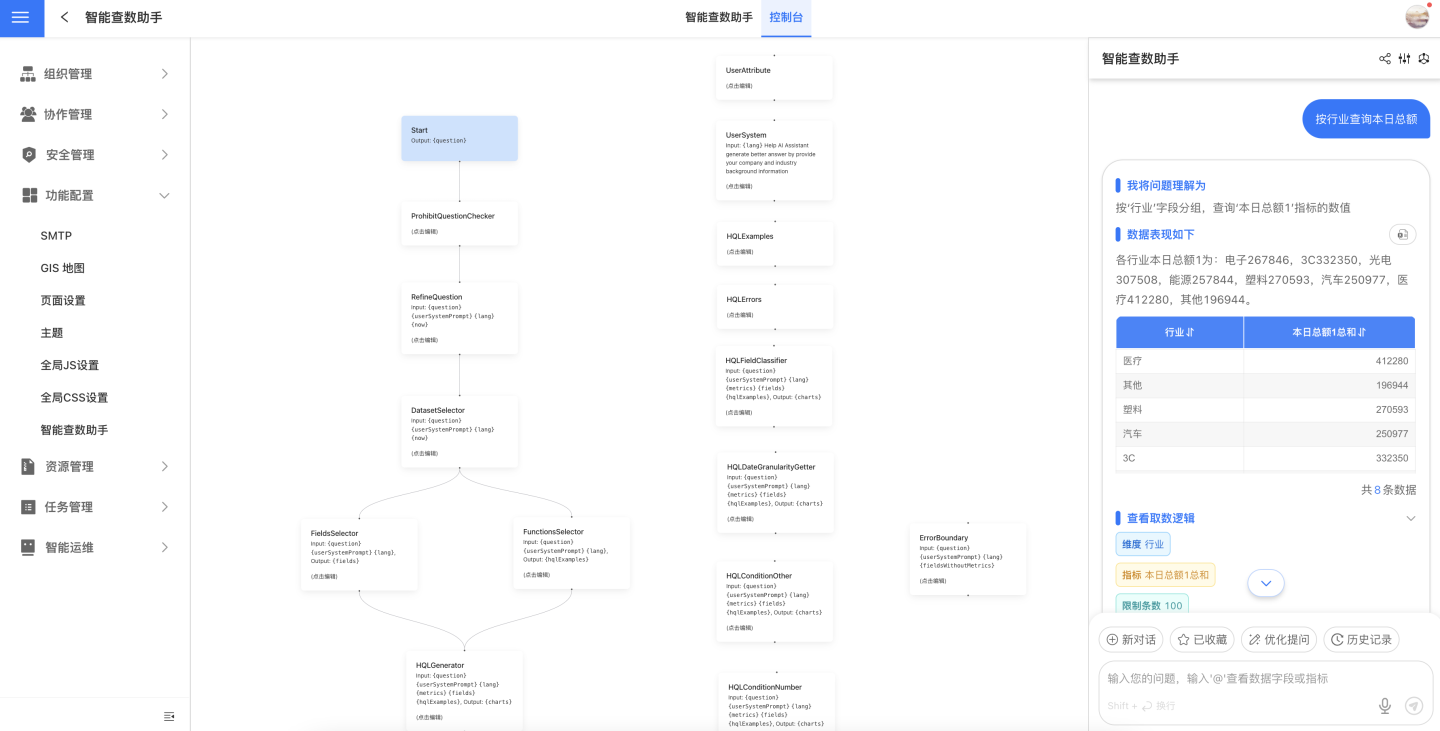

Console

The console shows the pipeline of the Data Agent's Workflow mode, where each node is an editable prompt. You can also chat with the Data Agent directly on this page, which makes troubleshooting convenient.

Enhancement

Editing prompts requires a certain understanding of large models, and it is recommended that this operation be performed by a system administrator.

UserSystem Prompt

Large language models possess a vast amount of general knowledge, but for specific business contexts, industry jargon, or proprietary knowledge, prompts can be used to enhance the model's understanding.

For example, in the e-commerce domain, terms like "big promotion" or "hot-selling item" may not have clear meanings to the model. By using prompts, the model's understanding of these terms can be improved.

Typically, a large language model might interpret "big promotion" as a large-scale promotional event conducted by merchants or platforms during a specific time period. Such events are often concentrated around shopping festivals, holidays, or themed days, such as "Singles' Day" or "618 Shopping Festival."

If you want the model to accurately understand the meaning of "big promotion," you can clarify in the prompt that it refers to events like Singles' Day, etc.

Conclusion Prompt (Answer Summary Prompt)

After the Data Agent retrieves data based on the user's query, the large model will use this prompt to summarize the query results and answer the question.

The system's default summary prompt is relatively basic. You can modify it to better align with real-world scenarios based on your business needs, making it more closely related to your company's operations.

SuggestQuestions Prompt (Recommended Question Prompt)

The system's default recommended question prompts are relatively basic. You can modify them based on your business needs so they fit your real-world scenarios and your company's business more closely.

How to Configure AI API Calls Using Agent Mode

API calls use Workflow mode by default. If you need to use Agent mode, additional environment requirements and configurations are necessary.

Environment Requirements

- Node.js 22 or later is required. Install Node.js on the machine where the HENGSHI SENSE service runs so that the service can invoke it. Older systems, such as CentOS 7, may not be supported.

Configuration Steps

- In Settings - Feature Configuration - Data Agent - General Model Configuration, enable the NODE_AGENT_ENABLE parameter.

- In Settings - Security Management - API Authorization, add an authorization, fill in the name, check the sudo feature, and record the clientId.

- In Settings - Feature Configuration - Data Agent - General Model Configuration, set the NODE_AGENT_CLIENT_ID parameter to the clientId created in the previous step.